Best LLM for Translation: An In-Depth Expert Guide

Large Language Models (LLMs) have revolutionized AI-driven translation, enabling organizations to communicate across borders more effectively than ever before.

With global markets expanding rapidly, the demand for the best LLM for translation—offering precise, culturally nuanced, and scalable solutions—is at an all-time high.

Advances in LLM technology now produce results that often rival human translation for many content types, in applications ranging from automating customer support to translating technical content.

However, selecting the best LLM for translation in 2025 requires understanding the capabilities, use cases, and long-term scalability of various models.

This article provides a comprehensive overview of the current landscape, key evaluation criteria, top models, and how innovative solutions like OneSky Localization Agent utilize multiple LLMs to deliver high-quality, scalable localization at speed.

The Rise of Large Language Models in Translation

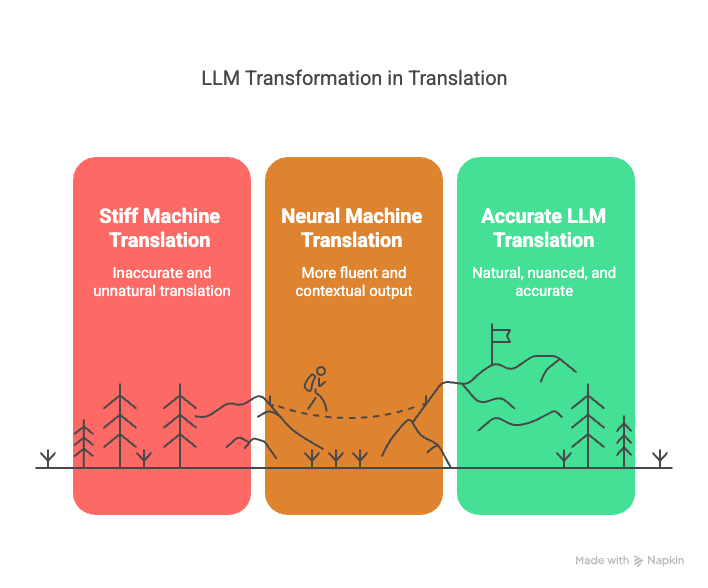

The journey of AI-driven translation has been remarkable over the past decade.

Traditional machine translation systems, largely rule-based or statistical, laid the groundwork but often produced stiff, less natural outputs.

The advent of neural machine translation (NMT) marked a significant step forward, introducing models that could generate more fluent and contextually appropriate translations.

However, in 2025, the landscape has shifted dramatically with the emergence of Large Language Models (LLMs)—powerful AI architectures trained on vast, diverse datasets encompassing multiple languages, domains, and styles.

These models, exemplified by innovations from leading research labs and technology companies, are capable of understanding context at an unprecedented scale, capturing idiomatic expressions, cultural nuances, and technical terminology with remarkable accuracy.

Why Are LLMs Transforming Translation?

1. Contextual Depth

Unlike earlier models limited to short-term context, LLMs process extended segments of text—sometimes entire documents—allowing them to deliver translations that maintain coherence and preserve meaning across lengthy passages.

2. Cultural Nuance & Style Preservation

LLMs can interpret idioms, slang, and stylistic cues, resulting in translations that feel natural and culturally appropriate, reducing the risk of miscommunication.

3. Domain Adaptability

Whether translating medical research, legal contracts, or marketing copy, LLMs can adapt to specialized terminology, making them ideal for industry-specific applications.

4. Multilingual Support

Modern LLMs support dozens to hundreds of languages, including some low-resource languages, enabling organizations to reach diverse markets without extensive retraining.

5. Integration with Automated Workflows

These models can be integrated into complex localization pipelines, supporting continuous delivery, quality assurance, and collaboration through API-driven processes.

This evolution signifies a fundamental shift from viewing machine translation as a separate, isolated task to embedding it within intelligent, autonomous systems that can learn, adapt, and improve over time.

Key Criteria for Choosing the Best LLM for Translation

Selecting the optimal Large Language Model in 2025 depends on understanding several crucial factors.

Here’s a guide to what you should evaluate:

1. Translation Quality

- Accuracy & Fluency: Does the model produce correct, natural-sounding translations?

- Contextual Understanding: Can it interpret idioms, references, and tone?

- Cultural Sensitivity: Does it respect cultural nuances to avoid misinterpretation?

2. Language and Domain Coverage

- Supports your required languages—whether major or low-resource.

- Excels across your content domains—legal, medical, technical, marketing.

3. Speed and Scalability

- Handles large volumes with minimal latency.

- Supports real-time translation for dynamic environments.

4. Customization & Fine-Tuning

- Adaptable to your specific terminologies, style guides, and branding.

- Allows incremental training to improve domain-specific vocabulary.

5. Integration & Compatibility

- Seamless API or SDK compatibility with your existing tools.

- Fits into established workflows for automation and continuous localization.

6. Data Privacy and Ethical Use

- Complies with GDPR, CCPA, and other regulations.

- Mitigates biases and ensures transparency in decision-making.

Leading LLMs for Translation in 2025

The field has expanded with several powerful models, each optimized for different translation needs.

Here’s an overview of the top contenders:

| Model Name | Core Strengths | Typical Use Cases | Languages Supported | Customization Features |
|---|---|---|---|---|
| OpenAI GPT-4 Turbo | Exceptional contextual understanding; versatile across languages | General translation, content generation | Broad language support, including some low-resource languages | Fine-tuning via API (availability varies by model version) |
| Google Gemini 1.5 | Long-context handling; enterprise integration | Large documents, customer service automation | Over 100 languages, strongest in major languages | Domain-specific fine-tuning |
| Anthropic Claude 3 | Safety-focused; coherent long-form translation | Support content, documentation | Major and some low-resource languages | Style and tone customization |
| Mistral Mixtral 8x7B | Open weights; highly customizable | In-house pipelines and research | Strongest in English, French, German, Spanish, and Italian | Fully customizable; weights can be fine-tuned |
| Meta NLLB-200 | Excels in underrepresented languages | Low-resource language translation | Around 200 languages | Fine-tuning for specific low-resource languages |
| DeepSeek V3 | Strong performance on technical content | Medical, legal, technical content | Mainstream languages, notably English and Chinese | Open weights allow custom domain training |
| Qwen 2.5-Max | Cost-efficient; privacy-compliant | Cost-sensitive and privacy-sensitive apps | Major languages, especially strong Chinese support | Moderate fine-tuning options |

Note: The right choice depends on your language scope, privacy requirements, and content type.

For instance, organizations focusing on low-resource languages should prioritize models like Meta NLLB-200, while enterprises needing long documents translated at high speed might prefer Google Gemini 1.5.
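The rule of thumb above can be written as a small routing function. This is a minimal sketch: the model labels are plain strings taken from the table, while the low-resource language set and the 50,000-word "long document" threshold are hypothetical values you would tune for your own workload, not vendor guidance.

```python
from dataclasses import dataclass

# Hypothetical set of languages treated as low-resource (ISO 639-1 codes).
LOW_RESOURCE = {"am", "ha", "ig", "qu", "yo"}  # Amharic, Hausa, Igbo, Quechua, Yoruba

# Hypothetical threshold for what counts as a "long document".
LONG_DOC_WORDS = 50_000


@dataclass
class Job:
    target_lang: str   # ISO 639-1 code of the target language
    word_count: int    # size of the source content in words
    self_hosted: bool  # True if data must stay on your own infrastructure


def pick_model(job: Job) -> str:
    """Return a model label following the rules of thumb in the text."""
    if job.target_lang in LOW_RESOURCE:
        return "Meta NLLB-200"          # specialist for underrepresented languages
    if job.self_hosted:
        return "Mistral Mixtral 8x7B"   # open weights, can run on-premise
    if job.word_count > LONG_DOC_WORDS:
        return "Google Gemini 1.5"      # long-context handling
    return "OpenAI GPT-4 Turbo"         # general-purpose default


print(pick_model(Job("yo", 1_200, False)))   # Meta NLLB-200
print(pick_model(Job("de", 80_000, False)))  # Google Gemini 1.5
```

Because the low-resource rule is checked first, a long Yoruba document still goes to NLLB-200; reorder the checks if your priorities differ.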

Comparison of Open-Source vs. Proprietary LLMs for Translation

When selecting a Large Language Model for translation, organizations often face a choice between open-source and proprietary solutions.

Each offers distinct advantages and challenges:

Open-Source LLMs

Examples:

BLOOM, Mixtral, Qwen, and community-built GPT-style models (such as GPT-J), typically distributed through platforms like Hugging Face.

Pros:

- Cost-Effective: No licensing fees for most models (though hosting and compute still cost money); ideal for startups or organizations with tight budgets.

- Customizability: Full control over training, fine-tuning, and deployment.

- Transparency: Open weights allow inspection of the architecture and, for some models, the training data and known limitations, which enhances trust.

- Flexibility: Easily integrate with internal systems or modify for specific needs.

Cons:

- Technical Expertise Required: Deployment and optimization demand skilled data scientists and engineers.

- Resource Intensive: Training or fine-tuning models can require significant computational power.

- Support & Reliability: Lacks dedicated customer support; updates depend on community contributions.

Proprietary LLMs

Examples:

GPT-4 Turbo, Google Gemini, Anthropic Claude, and similar commercial solutions.

Pros:

- Performance & Reliability: Usually offer cutting-edge accuracy, optimized for diverse languages and domains.

- Ease of Use: Ready-to-deploy APIs with minimal setup, ideal for rapid integration.

- Support & SLAs: Dedicated technical support and guaranteed uptime, crucial for enterprise-critical projects.

- Continuous Updates: Regular improvements, security patches, and feature enhancements.

Cons:

- Cost: Often involve ongoing licensing fees, especially for high-volume or enterprise use.

- Less Control: Limited ability to customize underlying models or data.

- Data Privacy Concerns: Sensitive content must be managed carefully to meet compliance standards.

Which Should You Choose?

Your decision depends largely on your organization’s technical capability, budget, and control needs.

Open-source models excel in flexibility and cost-efficiency but require expertise, whereas proprietary solutions prioritize ease of use and performance for enterprise-scale projects.

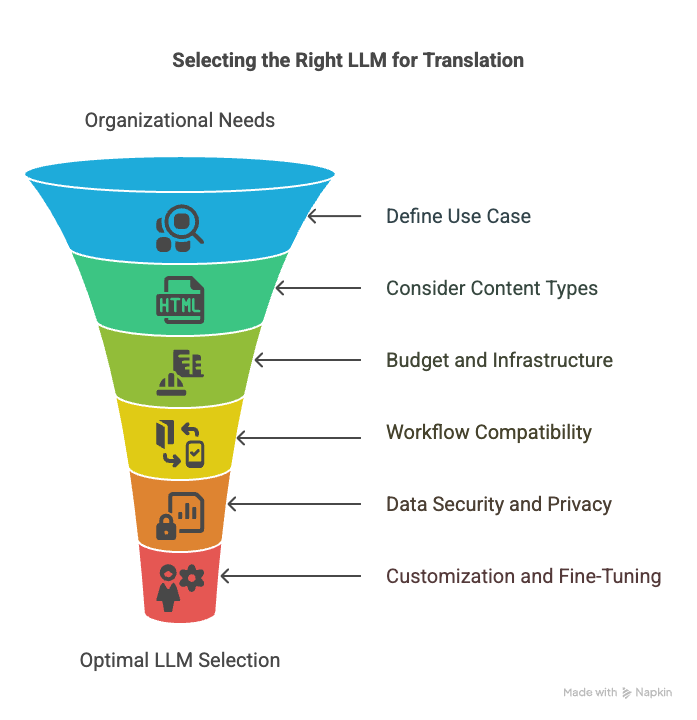

How to Select the Right LLM for Your Translation Needs

Choosing the best LLM depends on your organization’s specific goals, content, and workflow environment.

Here are crucial questions to guide your decision:

1. Define Your Use Case

- Enterprise Localization: Do you need bulk, consistent translations for websites or apps?

- Content Creation: Is your focus on marketing materials, blogs, or social media?

- Low-Resource Languages: Do you work with underrepresented languages needing specialized models?

2. Consider Content Types

- Legal & Medical: Require high precision, so favor models with domain adaptation.

- Technical & Scientific: Prefer models trained on technical data.

- Marketing & Creative: Need models good at tone, style, and idiom handling.

3. Budget and Infrastructure

- Open-Source Models: Cost-effective options like Mistral or Qwen suit self-hosted, customizable deployments.

- Cloud-based APIs: Models like GPT-4 Turbo or Google Gemini for minimal setup but ongoing costs.

- On-Premise Deployment: For sensitive data, look for models that support local hosting.

4. Workflow Compatibility

- Ensure smooth API integration into your existing tools: TMS, CMS, or custom apps.

- Support for automation, batch processing, and real-time translation.

5. Data Security and Privacy

- Prioritize models with strong compliance guarantees, especially for sensitive or regulated content.

- Check for bias mitigation and explainability features.

6. Customization & Fine-Tuning

- Can you train the model further on your terminology and style?

- Does the platform support incremental learning for continuous improvement?

Our Insider Tips:

Align your choice with your core needs—whether scalability, linguistic scope, domain accuracy, or regulatory compliance.

Multi-LLM strategies, combined with human oversight, often provide the best results, especially for high-stakes content.

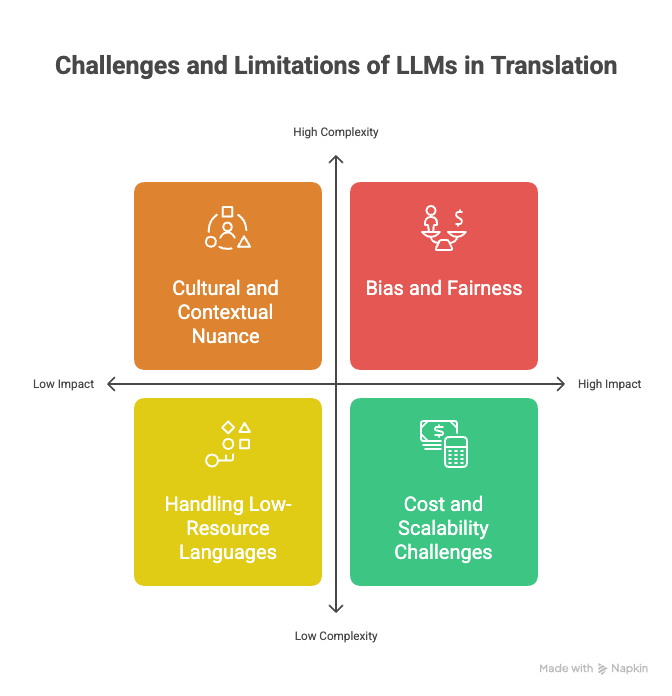

Challenges and Limitations of Current LLMs in Translation

While LLMs have revolutionized translation in 2025, they are not without their imperfections.

Being aware of these challenges helps organizations deploy AI solutions more effectively:

1. Bias and Fairness

- Inherent Biases: Many models reflect biases present in training data, which can lead to stereotypical or offensive outputs.

- Mitigation Efforts: Continuous efforts are needed to audit model outputs and refine training data to foster fairness.

2. Cultural and Contextual Nuance

- Subtle Differences: Translating idioms, humor, or culturally-specific references remains difficult.

- Risk of Misinterpretation: Poor handling of nuance can lead to miscommunication or offense, especially in sensitive content.

3. Handling Low-Resource Languages

- Limited Data: Many languages, especially indigenous or local dialects, lack sufficient data for high-quality training.

- Advances Needed: Ongoing research aims to improve translation quality for these languages, but gaps still exist.

4. Cost and Scalability Challenges

- Operational Expenses: Advanced models often require significant compute resources, increasing costs.

- Scaling Difficulties: Handling extremely large volumes or real-time translation with high accuracy can be resource-intensive.
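Operational expense for API-based translation is largely arithmetic on token volume. The sketch below assumes hypothetical per-million-token prices and a rough tokens-per-word ratio; both vary by model and language, so check your provider's current price list before relying on the numbers.

```python
def estimate_cost(words: int, tokens_per_word: float,
                  input_price_per_m: float, output_price_per_m: float,
                  output_ratio: float = 1.0) -> float:
    """Estimate the API cost in dollars of translating `words` source words.

    tokens_per_word: average tokens per source word (assumed; varies by language).
    input_price_per_m / output_price_per_m: price per one million tokens (assumed).
    output_ratio: target token count relative to source token count.
    """
    input_tokens = words * tokens_per_word
    output_tokens = input_tokens * output_ratio
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# 1 million words, 1.3 tokens/word, $2 in / $8 out per million tokens (all assumed).
cost = estimate_cost(1_000_000, 1.3, 2.0, 8.0, output_ratio=1.2)
print(f"${cost:,.2f}")  # $15.08
```

This ignores prompt overhead, such as instructions, glossaries, or context sent with every request, which can add substantially to the input token count.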

5. Transparency and Explainability

- Black-Box Nature: Many models lack transparent reasoning, making it difficult to understand why a specific translation was generated.

- Trust & Compliance: Transparent, accountable AI is crucial for regulated industries like legal and healthcare.

While LLMs are powerful, deploying them effectively requires managing these limitations: biases, cultural sensitivity, language support, cost, and transparency.

Combining human expertise with AI can mitigate many of these challenges, leading to more reliable and responsible translation workflows.

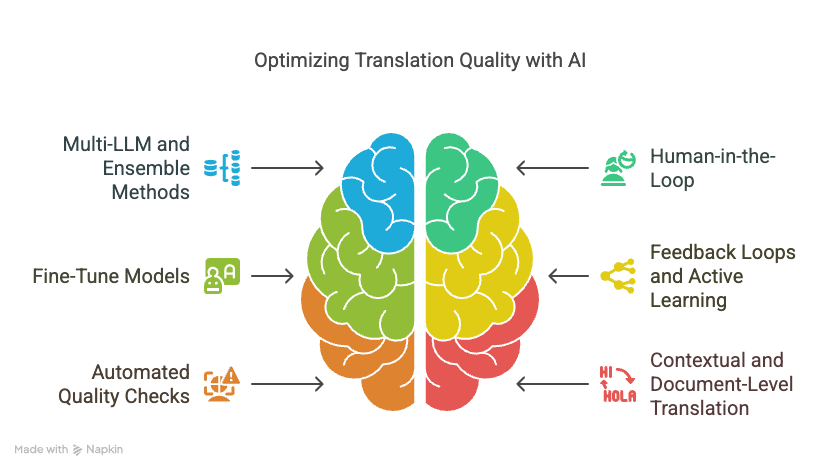

Strategies for Optimizing Translation Quality

To maximize the benefits of LLMs in translation, organizations are adopting a multi-faceted approach that combines the strengths of AI with human expertise.

Here are key strategies:

1. Employ Multi-LLM and Ensemble Methods

- Combine Multiple Models: Use different LLMs for various languages or content types. This approach leverages each model’s strengths.

- Ensemble Techniques: Aggregate outputs from multiple models to improve accuracy and consistency, especially in complex or critical content.
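One simple way to aggregate outputs is consensus selection: score each candidate by its average similarity to the other candidates and keep the one that agrees most with the rest, a basic form of minimum Bayes risk selection. This sketch uses Python's `difflib.SequenceMatcher` as a stand-in similarity measure; production systems typically use a learned quality metric instead. The candidate translations are made up for illustration.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]."""
    return SequenceMatcher(None, a, b).ratio()


def consensus_pick(candidates: list[str]) -> str:
    """Return the candidate with the highest mean similarity to all others."""
    if len(candidates) == 1:
        return candidates[0]

    def score(i: int) -> float:
        others = [c for j, c in enumerate(candidates) if j != i]
        return sum(similarity(candidates[i], o) for o in others) / len(others)

    best = max(range(len(candidates)), key=score)
    return candidates[best]


# Hypothetical outputs from three different models for the same source sentence.
outputs = [
    "Klicken Sie auf Speichern, um Ihre Änderungen zu übernehmen.",
    "Klicken Sie auf Speichern, um Ihre Änderungen zu sichern.",
    "Drücken Sie Speichern für die Änderungen.",
]
print(consensus_pick(outputs))
```

The outlier candidate loses because it agrees least with the others; note that consensus rewards agreement, not correctness, so it works best when the models have independent failure modes.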

2. Incorporate Human-in-the-Loop

- Post-Editing: Professional translators review and refine AI outputs—crucial for legal, medical, and high-stakes content.

- Quality Assurance: Human oversight ensures cultural appropriateness, correctness, and tone consistency.

3. Fine-Tune Models for Specific Domains

- Customized Training: Adapt models with your terminology, style guides, and content-specific data.

- Continuous Learning: Regular updates based on new data improve accuracy over time.
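Customized training usually starts by converting an existing translation memory into training examples. The sketch below produces chat-style JSONL records (one JSON object with a `messages` list per line), a format several hosted fine-tuning APIs accept; check your provider's documentation for the exact schema it expects. The system prompt and sample segments are hypothetical.

```python
import json

# Hypothetical translation-memory segments: (source, approved target).
tm_segments = [
    ("Sign in", "Anmelden"),
    ("Your cart is empty.", "Ihr Warenkorb ist leer."),
]

# Hypothetical style rule encoded as a system prompt.
SYSTEM_PROMPT = "Translate English UI text to German. Use the formal 'Sie'."


def to_jsonl(segments: list[tuple[str, str]], system_prompt: str) -> str:
    """Convert (source, target) pairs into chat-style JSONL training records."""
    lines = []
    for source, target in segments:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": source},
                {"role": "assistant", "content": target},
            ]
        }
        # ensure_ascii=False keeps characters such as 'ü' readable in the output
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


print(to_jsonl(tm_segments, SYSTEM_PROMPT))
```

Writing the returned string to a `.jsonl` file with UTF-8 encoding gives a training file you can upload or adapt to your provider's format.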

4. Use Feedback Loops and Active Learning

- Collect Feedback: Gather user or translator feedback on translations.

- Iterative Improvement: Use this data to retrain and refine models, ensuring they stay aligned with evolving language use.
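A common way to quantify translator feedback is post-edit distance: how much of the machine output a human had to change. The sketch below computes a word-level Levenshtein distance normalized by the length of the post-edited text. It is a simplified cousin of metrics such as TER (it ignores TER's block shifts), and segments with high scores are natural candidates for retraining data.

```python
def word_edit_distance(a: list[str], b: list[str]) -> int:
    """Levenshtein distance over word tokens (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, wa in enumerate(a, start=1):
        curr = [i]
        for j, wb in enumerate(b, start=1):
            cost = 0 if wa == wb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def post_edit_rate(machine: str, post_edited: str) -> float:
    """Edits needed per word of the post-edited text (0.0 = untouched)."""
    mt, pe = machine.split(), post_edited.split()
    if not pe:
        return float(len(mt) > 0)
    return word_edit_distance(mt, pe) / len(pe)


mt = "Click the button for save your changes"
pe = "Click the button to save your changes"
print(post_edit_rate(mt, pe))  # 1 substitution / 7 words ≈ 0.143
```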

5. Implement Automated Quality Checks

- Consistency & Style: Tools that verify adherence to style guides or terminology standards.

- Context & Accuracy: Validation steps that compare AI output with translation memories or glossaries.
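A basic terminology check verifies that, whenever a glossary source term appears in the source segment, its approved translation appears in the target. This is a minimal sketch assuming a plain dictionary glossary and case-insensitive whole-word matching; real checkers also handle inflection, which matters in languages like German.

```python
import re

# Hypothetical glossary: English source term -> approved German target term.
GLOSSARY = {
    "dashboard": "Dashboard",
    "invoice": "Rechnung",
}


def _contains(text: str, term: str) -> bool:
    """Case-insensitive whole-word match."""
    return re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE) is not None


def glossary_violations(source: str, target: str, glossary: dict[str, str]) -> list[str]:
    """Return source terms whose approved translation is missing from the target."""
    return [
        src for src, tgt in glossary.items()
        if _contains(source, src) and not _contains(target, tgt)
    ]


src = "Download the invoice from your dashboard."
tgt = "Laden Sie die Faktura aus Ihrem Dashboard herunter."
print(glossary_violations(src, tgt, GLOSSARY))  # ['invoice']
```

Flagged segments can be sent back for another model pass or routed to a human reviewer.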

6. Leverage Contextual and Document-Level Translation

- Document Context: Encourage models to analyze entire paragraphs or documents rather than isolated sentences.

- Benefits: Better preservation of tone, terminology consistency, and contextual relevance.
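When a document is too long for a single request, a common compromise is to translate it paragraph by paragraph while passing the preceding source paragraphs along as read-only context. The sketch below only builds the prompts; the prompt wording and the two-paragraph context window are assumptions, and sending each prompt to a model is left out.

```python
def build_context_prompts(paragraphs: list[str], target_lang: str = "French",
                          context_size: int = 2) -> list[str]:
    """Build one prompt per paragraph, prefixed with up to `context_size`
    preceding paragraphs that the model should read but not translate."""
    prompts = []
    for i, para in enumerate(paragraphs):
        context = paragraphs[max(0, i - context_size):i]
        parts = []
        if context:
            parts.append("Context (do not translate):\n" + "\n".join(context))
        parts.append(f"Translate into {target_lang}, keeping terminology "
                     f"consistent with the context:\n{para}")
        prompts.append("\n\n".join(parts))
    return prompts


doc = [
    "Notes sync across your devices.",
    "They also work offline.",
    "Sync resumes when you reconnect.",
]
for p in build_context_prompts(doc):
    print(p, end="\n---\n")
```

A larger `context_size` improves consistency of pronouns and terminology at the cost of more input tokens per request.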

In Practice

Modern localization workflows often integrate AI with human review, utilizing specialized tools that facilitate editing and quality assurance.

This hybrid approach ensures high-quality, scalable translation aligned with brand voice and audience expectations.

Case Studies & Success Stories

Real-world examples illustrate how organizations leverage AI and LLMs for successful translation and localization improvements:

Case Study 1: SaaS Company Accelerates Global Launch

A SaaS provider needed to localize their platform into multiple languages quickly. They adopted a multi-LLM architecture integrated into their workflow.

Results:

- 60% reduction in localization turnaround time.

- Consistent quality across 10+ languages.

- Cost savings of approximately 40% compared to traditional translation processes.

- Seamless updates for new features through automated continuous localization.

Case Study 2: Multinational Retailer Enhances Customer Support

A global retailer aimed to improve their multilingual chatbot. Using AI-driven translation with multi-LLM orchestration, they scaled multilingual support rapidly.

Results:

- Enabled real-time translation in 15 languages.

- Significantly improved customer satisfaction scores.

- Reduced support costs by 25%.

- Maintained high translation quality, leveraging human post-editing for critical interactions.

Case Study 3: Low-Resource Language Expansion

A nonprofit organization focused on indigenous languages used open-source models fine-tuned with local data to translate educational content.

Results:

- Empowered local communities with native-language educational resources.

- Achieved high accuracy despite limited initial data.

- Cost-effective deployment due to open-source customization.

How OneSky’s Advanced AI Approach Integrates the Best LLMs

At the forefront of AI-powered localization, OneSky employs a sophisticated, multi-layered approach to leverage the best of what LLM technology offers.

OneSky Localization Agent (OLA) exemplifies how combining multiple models and autonomous AI workflows produces superior translation quality, speed, and consistency.

Multi-Agent AI & Autonomous Workflow Orchestration

- Multi-LLM Strategy: OLA integrates several leading models—each selected for strengths like fluency, domain expertise, or low-resource language support.

- Automated Decision-Making: The system dynamically selects the appropriate model(s) for each task, based on content type, language, and complexity.

- Workflow Automation: Once triggered, OLA orchestrates the entire localization process—preprocessing, translation, review, and final delivery—without human intervention while maintaining full transparency.

Ensuring Quality, Consistency, and Speed

- Contextual Input Preparation: The system preprocesses strings, enhancing input quality and providing rich context to models.

- Cross-Model Comparison: Multiple models produce translations that are evaluated and ranked, ensuring the selection of the best output.

- Continuous Monitoring: Project managers can track progress in real-time via a centralized dashboard, observing how each AI agent performs at every stage.
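Input preparation for software strings often includes protecting placeholders so a model cannot translate or mangle them. The sketch below is a generic technique, not OneSky's documented implementation, and it assumes brace-style (`{name}`) and printf-style (`%s`, `%d`, `%1$s`) placeholders: swap them for opaque tokens before translation, then verify and restore them afterwards.

```python
import re

# Matches brace placeholders like {count} and printf-style %s / %d / %1$s.
PLACEHOLDER = re.compile(r"\{[A-Za-z0-9_]+\}|%(?:\d+\$)?[sd]")


def protect(text: str) -> tuple[str, list[str]]:
    """Replace each placeholder with a numbered token such as __PH0__."""
    found: list[str] = []

    def swap(m: re.Match) -> str:
        found.append(m.group(0))
        return f"__PH{len(found) - 1}__"

    return PLACEHOLDER.sub(swap, text), found


def restore(text: str, found: list[str]) -> str:
    """Put the original placeholders back; fail loudly if any token was lost."""
    for i, ph in enumerate(found):
        token = f"__PH{i}__"
        if token not in text:
            raise ValueError(f"placeholder {ph!r} missing from translation")
        text = text.replace(token, ph)
    return text


masked, found = protect("Hello {name}, you have %d new messages.")
print(masked)  # Hello __PH0__, you have __PH1__ new messages.
translated = "Bonjour __PH0__, vous avez __PH1__ nouveaux messages."  # stand-in for model output
print(restore(translated, found))  # Bonjour {name}, vous avez %d nouveaux messages.
```

Raising an error on a missing token turns a silent localization bug into a check that fails before the string ships.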

Supporting Enterprise-Scale Localization

- Scalable & Flexible: OLA supports large-volume projects involving thousands of assets, continuously updating to include the latest models and techniques.

- Transparency & Control: Full visibility into AI decisions, model selections, and translation quality metrics fosters trust and compliance.

- Human Oversight: When needed, professional linguists review the AI-generated content, ensuring accuracy in high-stakes translations such as legal or medical documents.

A Practical Example

Suppose a company needs to localize a new feature of their SaaS app into multiple languages.

OLA:

- Analyzes source content, detects language nuances.

- Selects the optimal models for technical accuracy and cultural appropriateness.

- Translates using multiple AI agents, compares results, and refines.

- Presents drafts for review or post-editing.

- Delivers consistent, high-quality localized content aligned with brand voice—automatically and efficiently.


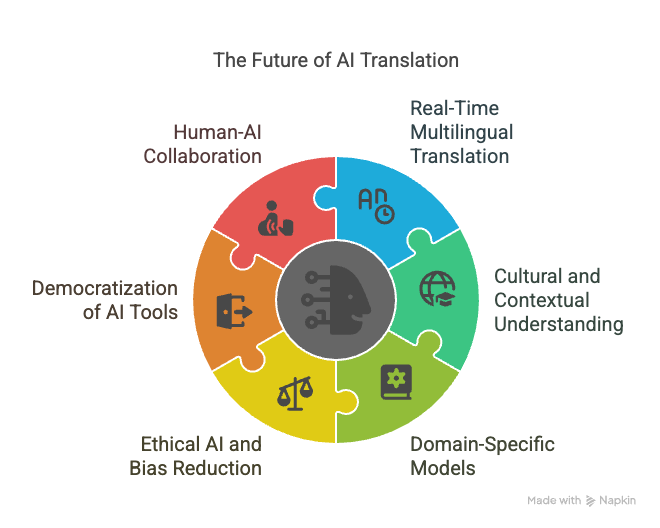

Future Outlook: The Next Frontier in AI Translation

The evolution of LLM technology continues at a rapid pace, with innovations set to redefine the boundaries of multilingual communication in the years ahead.

Here’s what we can expect for the future of AI-driven translation:

1. Real-Time, Multilingual, Multimodal Translation

- Simultaneous Multi-Language Support: Advances are pushing toward models capable of translating multiple languages instantly during conversations or live broadcasts.

- Multimodal Integration: Future models will seamlessly combine text, speech, and images—ensuring fluid communication across various media formats.

2. Deepened Cultural and Contextual Understanding

- Enhanced Cultural Nuance: Training data will include more culturally relevant idioms, slang, and contextual clues, allowing AI to produce even more natural translations.

- Adaptive Style & Tone: AI will tailor outputs to match local idioms, dialects, and audience preferences dynamically.

3. Domain-Specific & Personalized Translation Models

- Specialized Expertise: Making use of domain-specific data, models will excel in legal, medical, or technical fields.

- Personalization: Translation engines will learn individual or brand-specific jargon, tone, and style, resulting in more tailored content.

4. Ethical AI & Bias Reduction

- Fairness & Inclusivity: Efforts to address biases will be more integrated, providing fairer and more responsible AI outputs.

- Transparency & Explainability: Growing emphasis on AI models that can justify their translations, building trust in sensitive sectors.

5. Democratization of Advanced AI Tools

- Accessibility: Open-source models and low-cost APIs will make high-quality translation accessible to smaller organizations and even individual users.

- On-Device Processing: Future models may operate directly on user devices, enhancing privacy and on-demand translation without reliance on external servers.

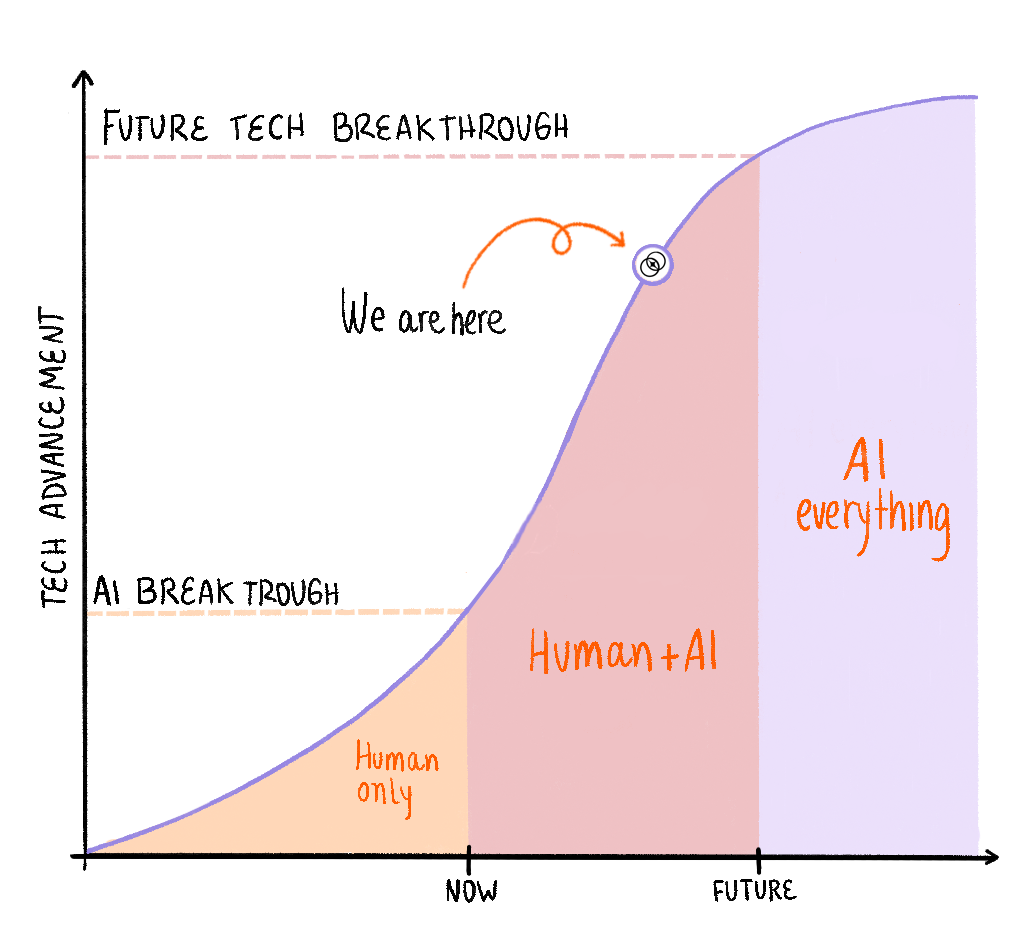

The Human-AI Collaboration

Despite these exciting developments, the role of human expertise remains vital.

AI will continue to augment professional translators, editors, and localization managers, handling routine and large-scale tasks while humans focus on cultural, creative, and high-stakes aspects.

In essence, AI translation in 2025 and beyond will be characterized by seamless, context-rich, multilingual communication—bringing the world closer together.


Final Thoughts

AI-powered translation continues to evolve rapidly, with the best LLMs transforming global communication—handling complex terminology and cultural nuances alike.

Selecting the right model depends on your content, language scope, and operational needs.

Combining multiple models and hybrid workflows remains key to achieving high quality and scalability.

As AI advances, solutions that blend machine intelligence with human insight will be essential for reliable, inclusive global communication.

Ready to embrace the future?

Discover how OLA leverages cutting-edge LLMs and autonomous workflows to accelerate your international expansion.

Contact us today for a free trial and see the future of localization in action.

Frequently Asked Questions (FAQs)

Q1: What is the most accurate LLM for translation in 2025?

A: The most accurate models tend to be those that combine extensive training data with advanced fine-tuning capabilities.

Currently, models like GPT-4 Turbo and Google Gemini 1.5 are highly regarded for their contextual understanding and fluency across multiple languages.

Q2: Can LLMs handle low-resource languages effectively?

A: Increasingly, yes, though quality still varies by language.

Models like Meta's NLLB-200 are specifically designed for low-resource languages and cover around 200 languages, making them a strong starting point for diverse global markets.

Q3: How do LLMs compare to traditional machine translation tools?

A: LLMs offer significantly better fluency, context-awareness, and cultural sensitivity than traditional rule-based or statistical MT systems.

They produce more natural translations that better capture idioms and stylistic nuances.

Q4: Are LLMs suitable for specialized industries like legal or medical translation?

A: When fine-tuned on domain-specific data, LLMs can excel in specialized fields.

However, high-stakes content typically benefits from human review and post-editing to ensure compliance and accuracy.

Q5: How important is model customization and fine-tuning?

A: Customization allows models to adapt to your specific terminology, style, and brand voice, resulting in more consistent and relatable translations.

Many leading models support fine-tuning for optimal domain relevance.

Q6: Is data privacy a concern with LLMs?

A: Yes, especially in regulated industries.

Many reputable providers offer data-processing terms designed to support compliance with standards such as GDPR, but compliance also depends on how you deploy the model and what data you send to it. Always verify compliance before deployment.

Q7: What role do human translators play when using AI models?

A: AI significantly speeds up the translation process, but for critical or high-stakes content, human review remains essential to ensure nuance, tone, and accuracy are maintained.

Q8: How do multi-LLM workflows improve translation quality?

A: Combining multiple models allows systems to select the best output according to context, domain, or language pair, resulting in higher accuracy, consistency, and reliability at scale.

Q9: Can AI translation models support real-time multilingual communication?

A: Increasingly, yes.

Low-latency LLMs can support near-real-time translation across multiple languages, making live conversations, meetings, and multimedia content more accessible globally.

Q10: What’s the best way to get started with AI translation for my organization?

A: Assess your specific language, content, and operational needs.

Consider partnering with a provider that integrates multi-LLM strategies and autonomous workflows—like OneSky—to ensure quality and scalability.

Written by
