LangChain Alternatives: The Top Frameworks for Building Modern AI and Autonomous Workflows

In the fast-evolving world of AI development, LangChain has established itself as a leading framework for orchestrating large language models (LLMs) into complex, multi-component applications.

Its modular design and extensive integrations have made it a popular choice for developers building conversational AI, retrieval systems, and autonomous agents.

However, as the AI landscape matures, many teams are looking for alternatives that offer deeper customization, faster iteration, or a closer fit to their specific use cases.

Keeping pace with new models, managing complexity, scaling, and ease of use all drive this need.

Whether you’re a prompt engineer, data scientist, enterprise architect, or low-code developer, choosing the right tool can significantly affect your project’s success. In this article, we walk you through the main LangChain alternatives worth knowing.

We also cover OneSky Localization Agent (OLA), a complementary solution for organizations that need multilingual AI applications, which integrates AI-powered content localization into existing workflows.

- What is LangChain?

- Why Look Beyond LangChain?

- Overview of Top LangChain Alternatives

- In-Depth Exploration of Top LangChain Alternatives

- Enhancing Multilingual AI Workflows: Why Specialized Localization with OneSky Matters

- How to Choose the Right Framework

- Final Insights

- Frequently Asked Questions (FAQ)

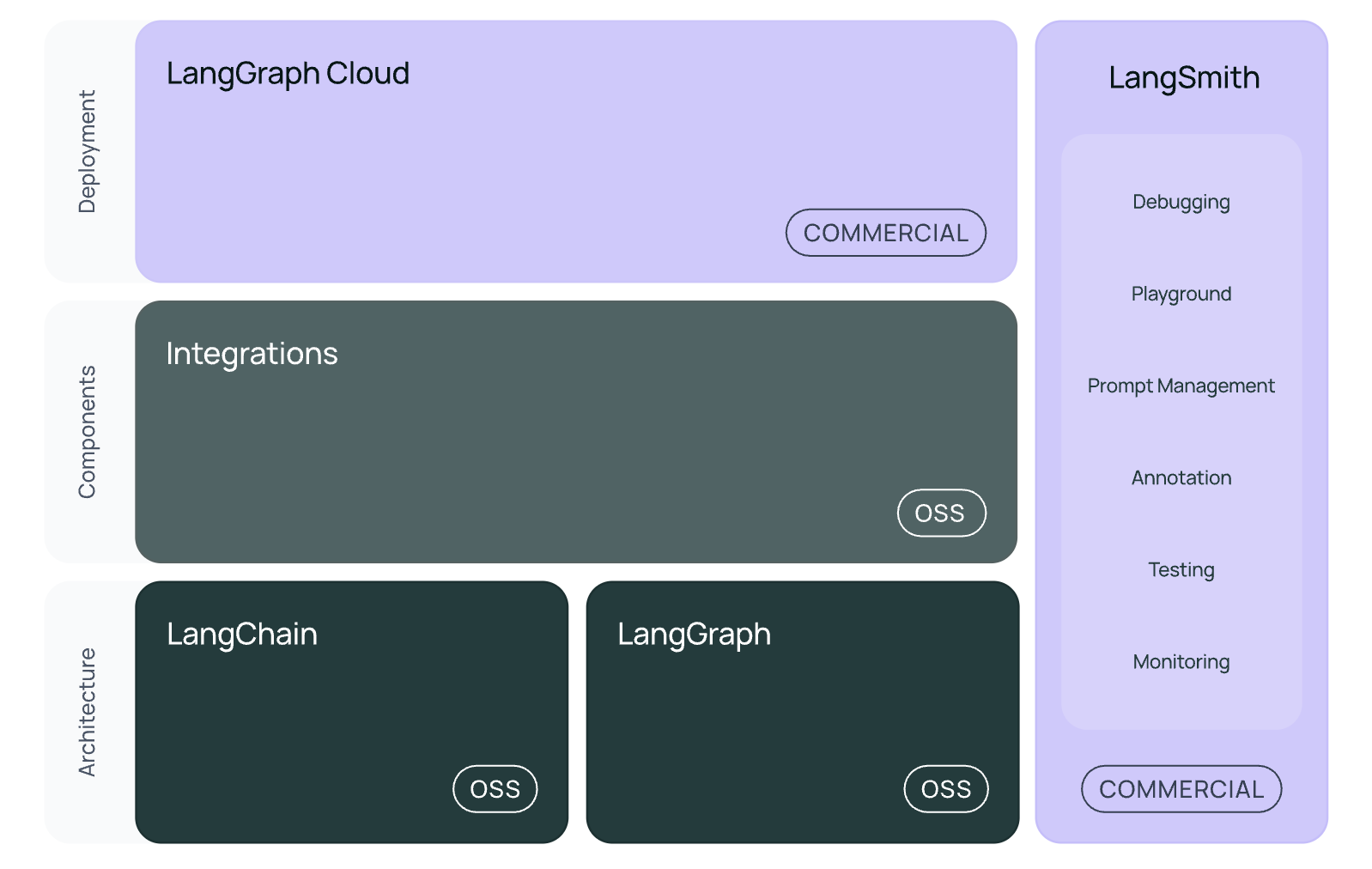

What is LangChain?

LangChain is an open-source framework designed to connect and chain together components such as prompts, language models, data sources, and tools, enabling developers to build sophisticated AI applications with less boilerplate code.

It offers:

- Modular chains and pipelines

- Prebuilt connectors for APIs and data stores

- Support for autonomous agents

- Infrastructure for prompt management and debugging
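To make chaining concrete, here is a framework-free sketch in plain Python that composes a prompt template, a model call, and an output parser into one callable. `fake_llm` is a hypothetical stand-in for a real model call, and none of these names come from LangChain's API; LangChain wraps the same pattern in its own abstractions.

```python
from typing import Any, Callable

Step = Callable[[Any], Any]


def chain(*steps: Step) -> Step:
    """Compose steps left to right into one callable."""
    def run(value: Any) -> Any:
        for step in steps:
            value = step(value)  # each step's output feeds the next step
        return value
    return run


def prompt_template(inputs: dict) -> str:
    # Fill a fixed prompt template from the caller's inputs.
    return f"Translate to French: {inputs['text']}"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call: returns a canned, untidy reply.
    canned = {"Translate to French: Hello": "  Bonjour\n"}
    return canned.get(prompt, "")


def clean_output(raw: str) -> str:
    # Minimal output parser: strip surrounding whitespace from the reply.
    return raw.strip()


translate = chain(prompt_template, fake_llm, clean_output)
print(translate({"text": "Hello"}))  # prints: Bonjour
```

Because every step shares the same call shape, swapping the fake model for a real client or adding a validation step means changing one argument to `chain`, which is the flexibility the bullet points above describe.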

Why Look Beyond LangChain?

LangChain has been instrumental in enabling rapid development and experimentation with LLMs, but its complex architecture and default abstractions can hinder:

- Faster prototyping and iteration — especially for teams wanting to test new models or workflows quickly.

- Deep customization — such as fine-tuning prompts, debugging, or managing data workflows precisely.

- Enterprise scalability — when security, compliance, and multi-user collaboration are priorities.

- Multilingual and localization support — critical for global applications, requiring reliable translation workflows.

This need for flexibility leads organizations to consider specialized or more adaptable solutions, each emphasizing different aspects such as data control, debuggability, or global content management.

Overview of Top LangChain Alternatives

| Tool / Platform | Core Features | How It Differs from LangChain | Ideal Use Cases | Pricing / Availability |

|---|---|---|---|---|

| Vellum AI | Prompt testing, version control, environment orchestration | More in-depth prompt control and fine-tuning capabilities | Precision prompt engineering | Varies |

| Mirascope | Validations, structured prompt management, debugging tools | Advanced validation leveraging Pydantic, version tracking | Prompt optimization, output validation | Free / Open-source |

| Galileo | Error analysis, model fine-tuning, observability | More detailed error insights, model diagnostics | Debugging, model improvements | Varies |

| AutoGPT | Fully autonomous agents, multi-step workflows | Highly customizable for complex multi-agent automation | Autonomous multi-scenario AI bots | Varies |

| LlamaIndex | Data pipelines, retrieval-augmented generation, knowledge integration | Focused on data and content orchestration, indexing capabilities | Large knowledge bases, enterprise data apps | Open-source / Free |

| Haystack | Search, semantic retrieval, NLP pipelines | Advanced retrieval and document management capabilities | Search engines, question-answering systems | Free / Open-source |

| Flowise AI | Low-code drag-and-drop workflows, rapid deployment | User-friendly for non-developers, minimal coding required | Rapid prototyping, non-technical teams | Free / Open-source |

| Milvus | High-performance vector similarity search | Focus on scalable, real-time semantic search | Large datasets, embedding search | Open-source / Paid options |

| Weaviate | Distributed vector search, knowledge graphs | Enhanced enterprise features, scalability | Content search, enterprise knowledge management | Varies / Free tier available |

| OpenAI APIs | Direct access, custom interactions | Lightweight, full control, more flexible than middleware | Custom model workflows, bespoke integrations | Pay-as-you-go |

| IBM watsonx | End-to-end enterprise AI, security, compliance | Complete enterprise AI platform with extensive tools | Large-scale, compliant, secure deployments | Varies |

| Amazon Bedrock | Managed deployment, multi-model support | Cloud-native, scalable application deployment | Cross-region, high-availability AI services | Pay-as-you-go |

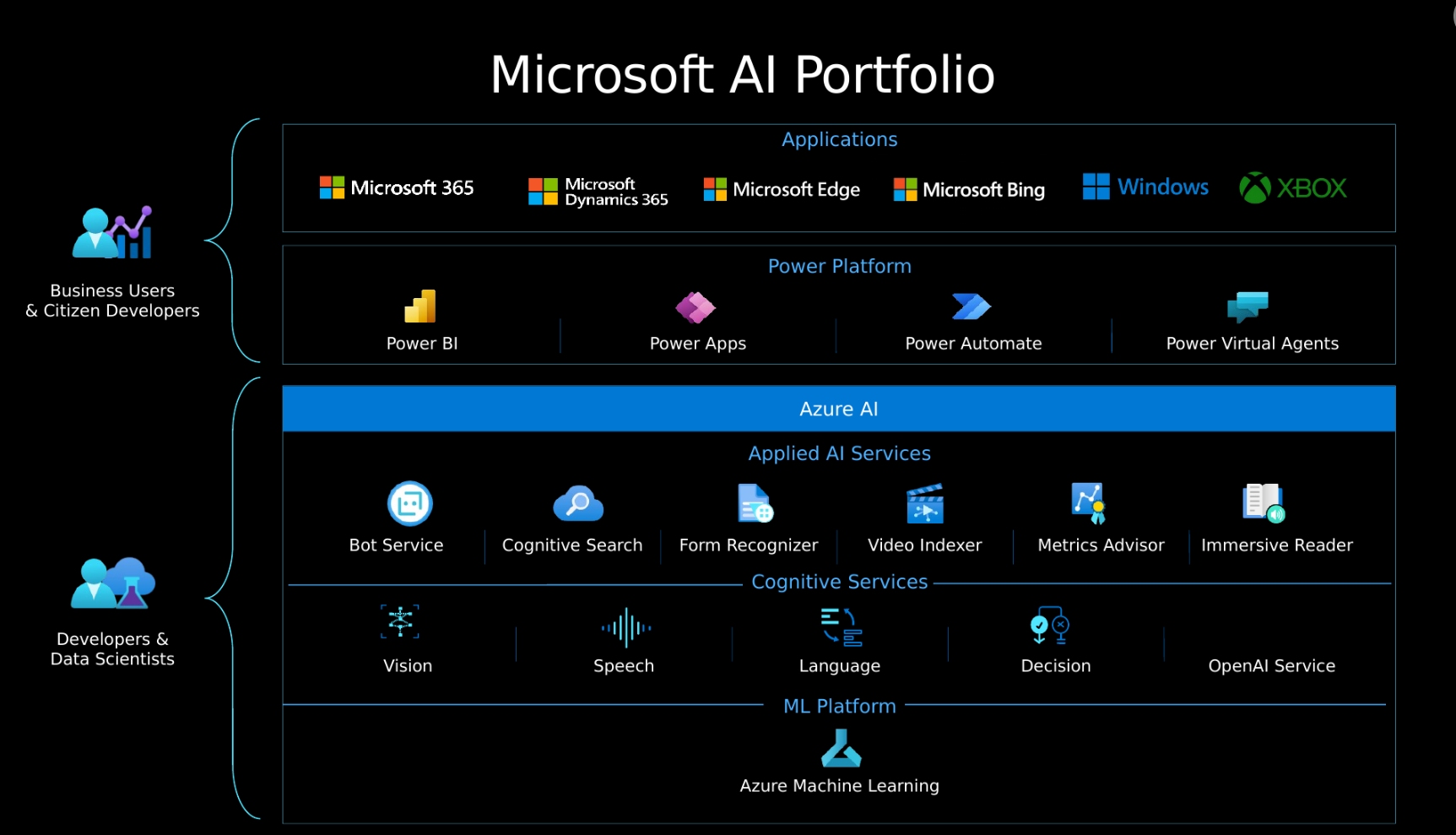

| Microsoft Azure AI | Cloud hosting, integrated workflow orchestration, security | Strong enterprise ecosystem integration | Hybrid cloud, large orgs, enterprise-grade AI | Pay-as-you-go |

In-Depth Exploration of Top LangChain Alternatives

1. Vellum AI

Overview:

Vellum AI is a prompt engineering platform dedicated to designing, testing, and managing prompts at scale.

It offers a playground-style environment where teams can refine prompts iteratively and maintain version control, making it especially useful for teams focused on prompt optimization.

What Makes Vellum Different:

Unlike LangChain, which provides a framework for chaining components, Vellum concentrates specifically on prompt control.

It provides tools for precise prompt tuning, validation, and scalable testing without requiring complex pipeline setup, and it targets prompt engineers and NLP researchers.
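Vellum handles this through its own hosted interface. The underlying idea of keeping numbered prompt versions and scoring each one against the same test cases can be sketched in plain Python; `PromptRegistry`, `score`, and `toy_model` are hypothetical names for illustration, not Vellum's API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store of numbered prompt versions."""
    versions: dict[str, list[str]] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        # Append a new version; earlier versions stay available for comparison.
        self.versions.setdefault(name, []).append(template)
        return len(self.versions[name])

    def get(self, name: str, version: int) -> str:
        return self.versions[name][version - 1]


def score(template: str, cases: list[tuple[str, str]],
          model: Callable[[str], str]) -> float:
    """Fraction of test cases whose output contains the expected answer."""
    hits = sum(expected in model(template.format(q=q)) for q, expected in cases)
    return hits / len(cases)


def toy_model(prompt: str) -> str:
    # Stand-in model: answers correctly only when asked for a one-word reply.
    return "Paris" if prompt.startswith("Answer in one word") else "It depends."


registry = PromptRegistry()
v1 = registry.publish("capital", "What is the {q}?")
v2 = registry.publish("capital", "Answer in one word: what is the {q}?")
cases = [("capital of France", "Paris")]
for v in (v1, v2):
    print(f"v{v}: {score(registry.get('capital', v), cases, toy_model):.0%}")
```

Keeping old versions instead of overwriting them is what makes side-by-side comparison and rollback possible.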

Use Cases:

- Fine-tuning prompts for chatbots and NLP applications.

- Scaling prompt testing across different models.

- Rapid iteration for conversational AI.

Pricing:

Depends on usage; tiered plans for large-scale experiments.

| Aspect | Details |
|---|---|
| Strengths | Precise prompt control, versioning, scalable testing |
| Ideal For | Prompt engineering, research labs, NLP tuning |
| Comparison to LangChain | Focused on prompt design and control rather than component chaining |

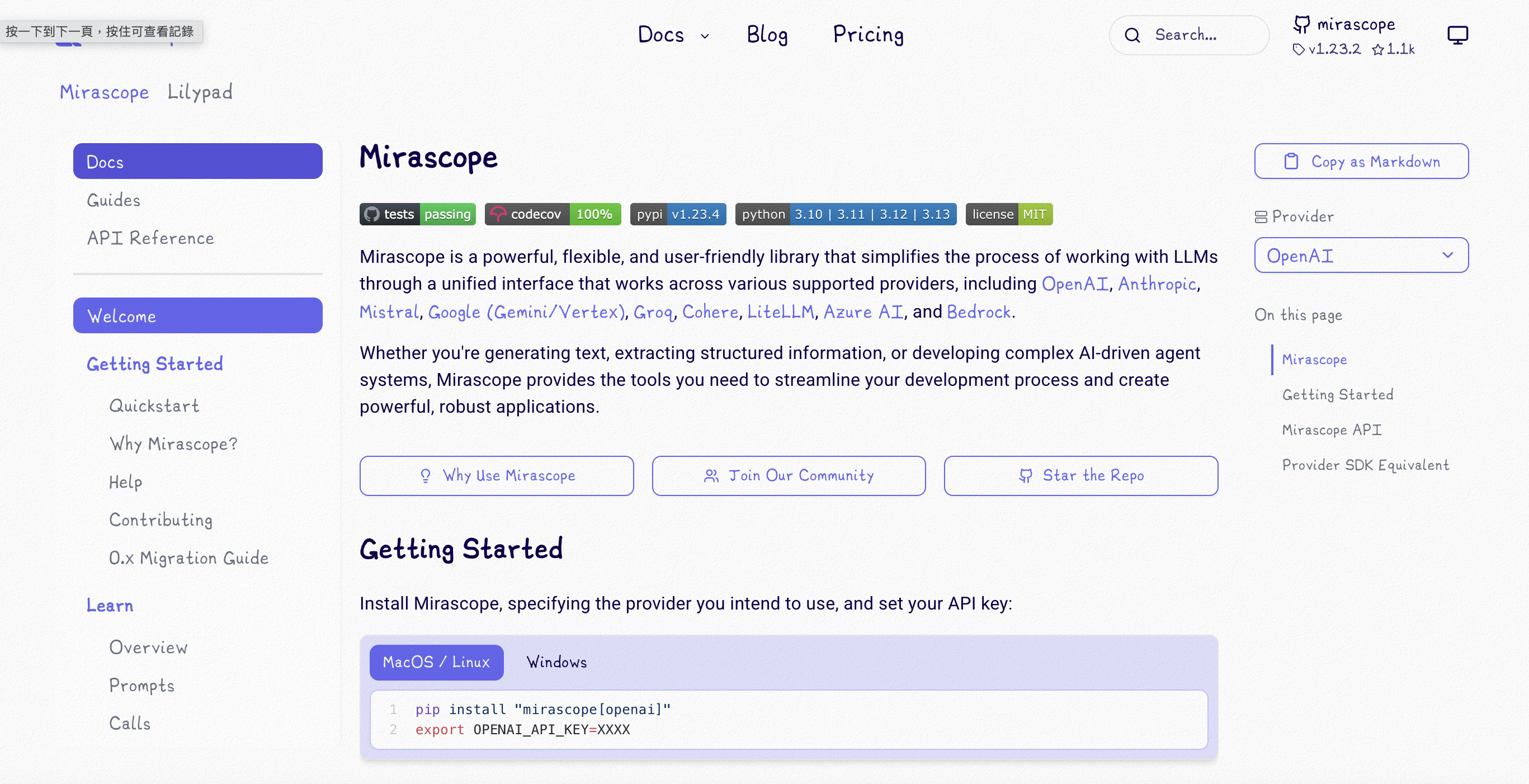

2. Mirascope

Overview:

Mirascope is a tool for structured prompt management focused on validation, version control, and debugging.

It leverages Pydantic for output validation, helping developers ensure prompt accuracy and reduce errors during deployment.
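Mirascope expresses output schemas as Pydantic models. To keep this sketch dependency-free, it hand-rolls the same kind of check with a dataclass and explicit rules: parse the model's JSON reply and reject anything with missing keys, an unknown label, or an out-of-range confidence. The `Sentiment` schema and helper names are hypothetical, and this is not Mirascope's API.

```python
import json
from dataclasses import dataclass

ALLOWED_LABELS = {"positive", "negative", "neutral"}


@dataclass(frozen=True)
class Sentiment:
    label: str
    confidence: float


def parse_sentiment(raw: str) -> Sentiment:
    """Parse a model's JSON reply into a Sentiment, raising ValueError if invalid."""
    data = json.loads(raw)  # malformed JSON raises JSONDecodeError, a ValueError
    if not isinstance(data, dict) or set(data) != {"label", "confidence"}:
        raise ValueError(f"expected an object with 'label' and 'confidence': {raw!r}")
    label, conf = data["label"], data["confidence"]
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"label must be one of {sorted(ALLOWED_LABELS)}, got {label!r}")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(conf, bool) or not isinstance(conf, (int, float)) or not 0 <= conf <= 1:
        raise ValueError(f"confidence must be a number in [0, 1], got {conf!r}")
    return Sentiment(label, float(conf))


def is_valid(raw: str) -> bool:
    """Convenience check, e.g. to decide whether to re-prompt the model."""
    try:
        parse_sentiment(raw)
    except ValueError:
        return False
    return True


print(parse_sentiment('{"label": "positive", "confidence": 0.92}'))
print(is_valid('{"label": "happy", "confidence": 0.92}'))  # False: unknown label
```

Failing loudly at the parsing boundary means a malformed reply is caught before it reaches downstream code, which is the reliability property the paragraph above describes.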

What Makes Mirascope Different:

While LangChain emphasizes chaining components, Mirascope provides granular control over prompt structure, output validation, and traceability.

It excels in high-reliability environments where prompt correctness and output consistency are crucial.

Use Cases:

- Validating prompts against compliance requirements.

- Managing prompt version history for audits.

- Debugging complex prompt responses in production.

Pricing:

Open-source and free.

| Aspect | Details |
|---|---|
| Strengths | Validation, traceability, output correctness |
| Ideal For | Production systems, compliance, high reliability |
| Comparison to LangChain | Focused on validation and debugging, not chaining workflows |

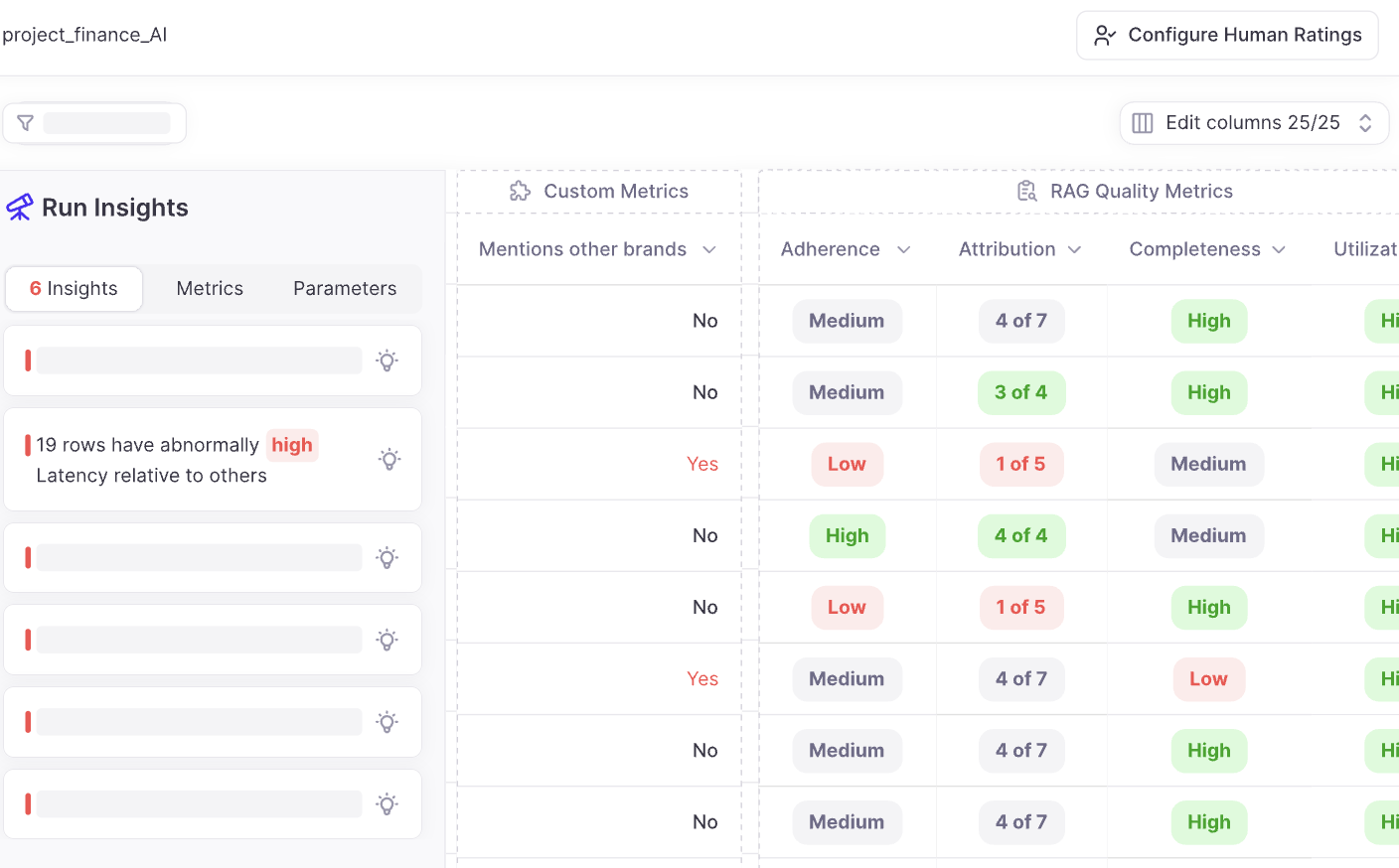

3. Galileo

Overview:

Galileo offers detailed debugging, error analysis, and performance diagnostics for large language models.

It provides insights into model errors, dataset quality, and bottlenecks, helping teams improve model accuracy and reliability.
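Galileo's tooling is far richer, but the core idea behind slice-based error analysis fits in a few lines: group evaluation records by a data slice and rank slices by error rate, so the weakest area of the model surfaces first. The record fields (`slice`, `expected`, `predicted`) and the sample data are hypothetical, not Galileo's API or output.

```python
from collections import Counter


def error_rate_by_slice(records: list[dict]) -> dict[str, float]:
    """Error rate per data slice, ordered from worst to best."""
    totals = Counter(r["slice"] for r in records)
    errors = Counter(r["slice"] for r in records if r["predicted"] != r["expected"])
    rates = {s: errors[s] / totals[s] for s in totals}  # Counter returns 0 for missing keys
    return dict(sorted(rates.items(), key=lambda kv: kv[1], reverse=True))


records = [
    {"slice": "short_query", "expected": "refund", "predicted": "refund"},
    {"slice": "short_query", "expected": "billing", "predicted": "billing"},
    {"slice": "non_english", "expected": "refund", "predicted": "billing"},
    {"slice": "non_english", "expected": "billing", "predicted": "billing"},
]
print(error_rate_by_slice(records))  # {'non_english': 0.5, 'short_query': 0.0}
```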

What Makes Galileo Different:

Unlike LangChain’s abstraction layers, Galileo focuses on transparency and diagnostics, providing deep insights into model behavior and errors.

It is particularly valuable for teams focused on AI quality and iterative improvement.

Use Cases:

- Diagnosing model errors in production.

- Fine-tuning models with detailed error reports.

- Improving dataset quality through anomaly detection.

Pricing:

Variable; offers enterprise plans and individual licensing.

| Aspect | Details |
|---|---|
| Strengths | Deep diagnostics, transparency, error insights |
| Ideal For | Model debugging, error reduction, quality improvement |
| Comparison to LangChain | Emphasizes diagnostics over chaining and pipeline building |

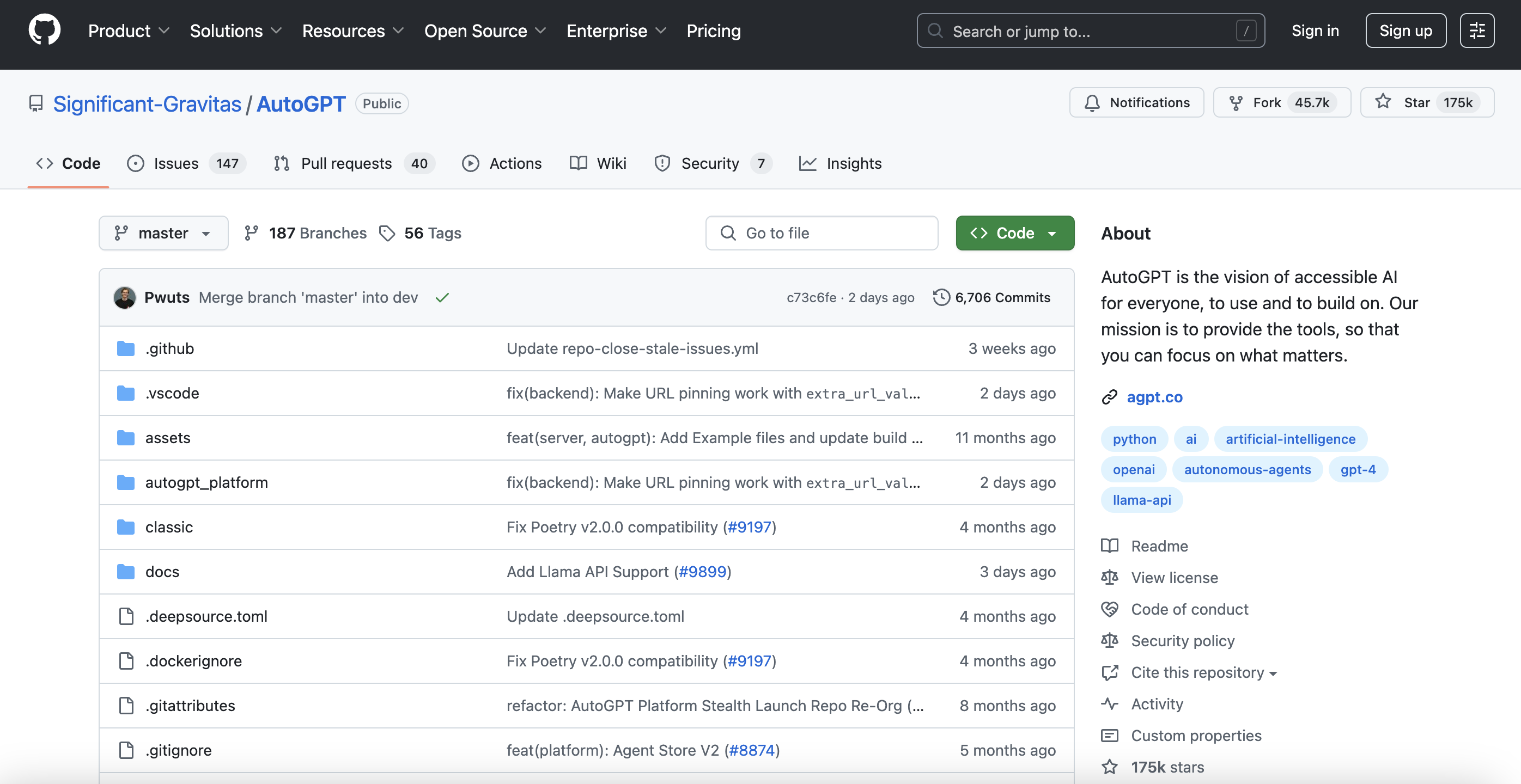

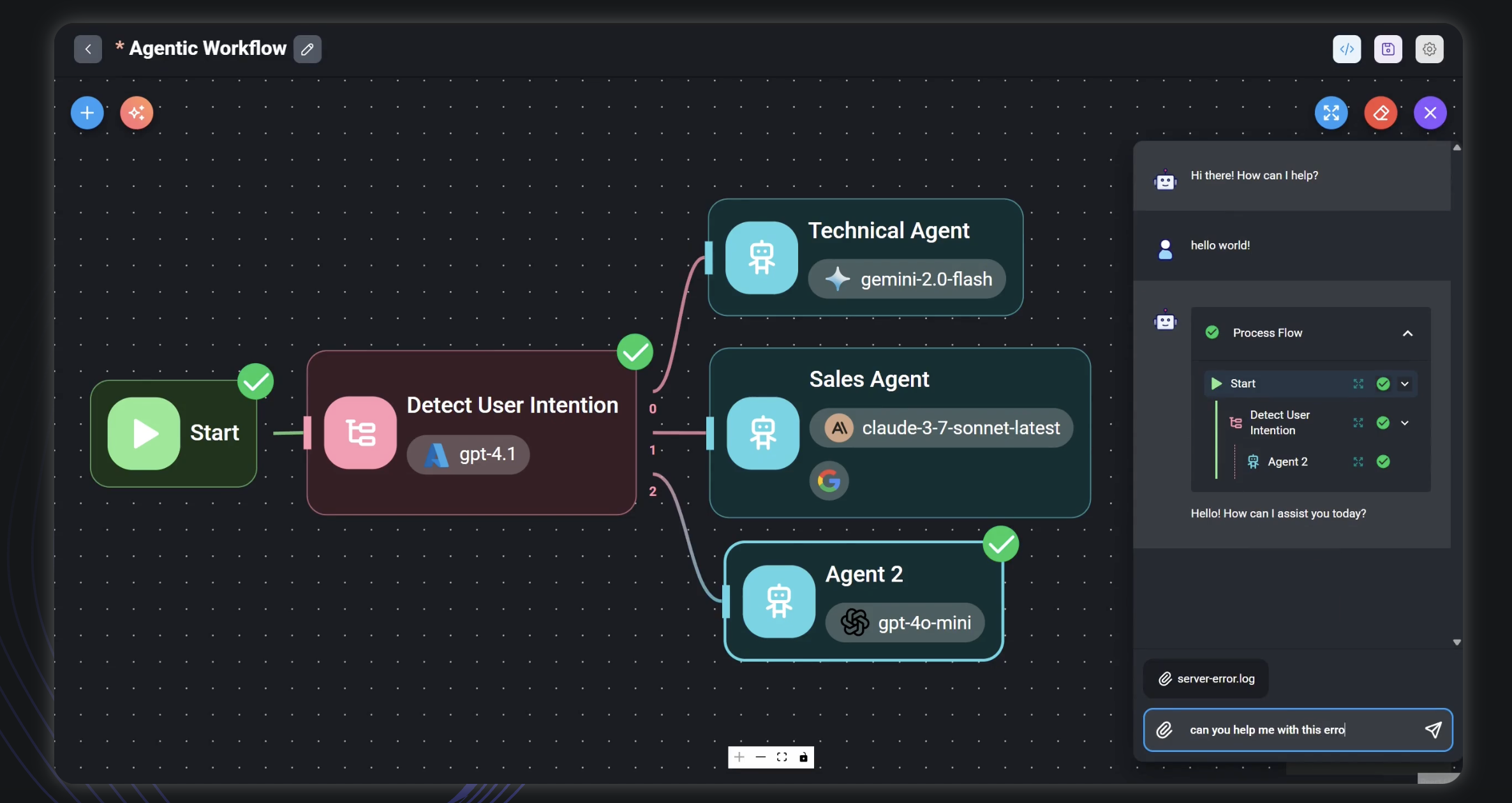

4. AutoGPT

Overview:

AutoGPT is an autonomous AI agent framework capable of executing multi-step, goal-driven workflows independently.

It chains models, tools, and APIs to plan, execute, and adapt with minimal human intervention.
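At its core, an autonomous agent runs a loop: ask a planner (usually an LLM) for the next action, execute the matching tool, record the observation, and stop when the planner declares the goal met or a step limit is reached. The sketch below replaces the LLM planner with a hypothetical scripted one so it runs offline; the tool names are made up, and this is not AutoGPT's code or API.

```python
from typing import Callable

Tool = Callable[[str], str]
Planner = Callable[[str, list[str]], tuple[str, str]]


def run_agent(goal: str, plan: Planner, tools: dict[str, Tool],
              max_steps: int = 5) -> list[str]:
    """Loop: ask the planner for an action, run the tool, record the observation."""
    history: list[str] = []
    for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
        action, arg = plan(goal, history)
        if action == "finish":
            history.append(f"finish: {arg}")
            break
        observation = tools[action](arg)  # an unknown action raises KeyError here
        history.append(f"{action}({arg}) -> {observation}")
    return history


def scripted_planner(goal: str, history: list[str]) -> tuple[str, str]:
    # Hypothetical stand-in for an LLM planner: search, then summarize, then finish.
    steps = [("search", goal), ("summarize", "search results"), ("finish", "report ready")]
    return steps[min(len(history), len(steps) - 1)]


tools = {
    "search": lambda q: f"3 articles about {q}",
    "summarize": lambda text: f"summary of {text}",
}
for line in run_agent("vector databases", scripted_planner, tools):
    print(line)
```

The `max_steps` cap is a deliberate design choice: without it, an agent whose planner never decides to finish would keep running, and keep calling paid model APIs, indefinitely.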

What Makes AutoGPT Different:

LangChain provides building blocks for chains and agents, while AutoGPT is built around fully autonomous agents that break a goal into steps, make decisions across scenarios, and manage their own progress. This makes it well suited to complex, multi-layer automation.

Use Cases:

- Autonomous content creation & curation.

- Complex research and data analysis workflows.

- Self-operating customer support bots.

Pricing:

Open-source, free, supported by an active community.

| Aspect | Details |
|---|---|
| Strengths | Autonomous operation, goal-oriented workflows |
| Ideal For | Complex automation, self-operating AI agents |
| Comparison to LangChain | Focused on autonomous, goal-driven systems rather than chaining components |

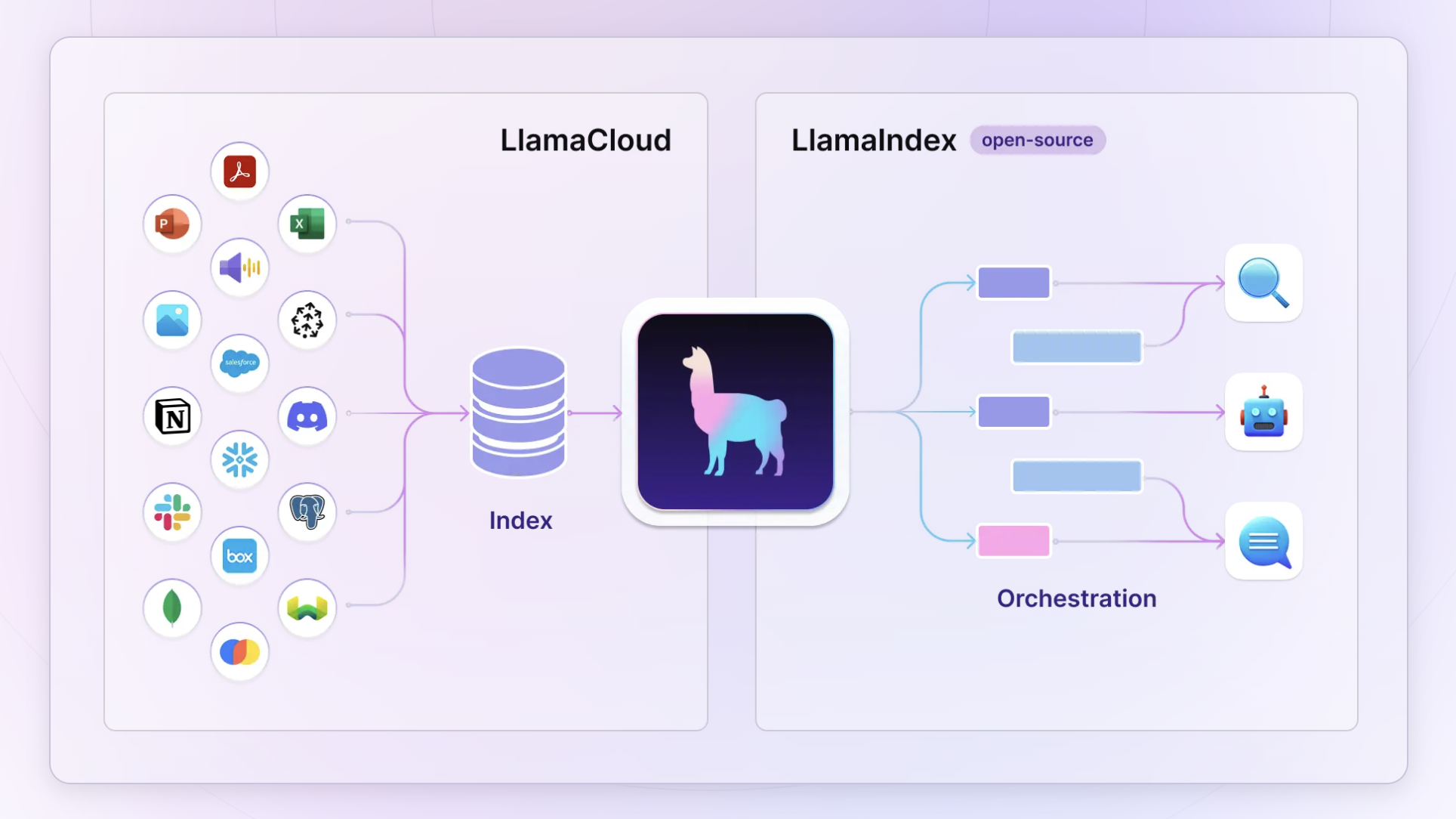

5. LlamaIndex

Overview:

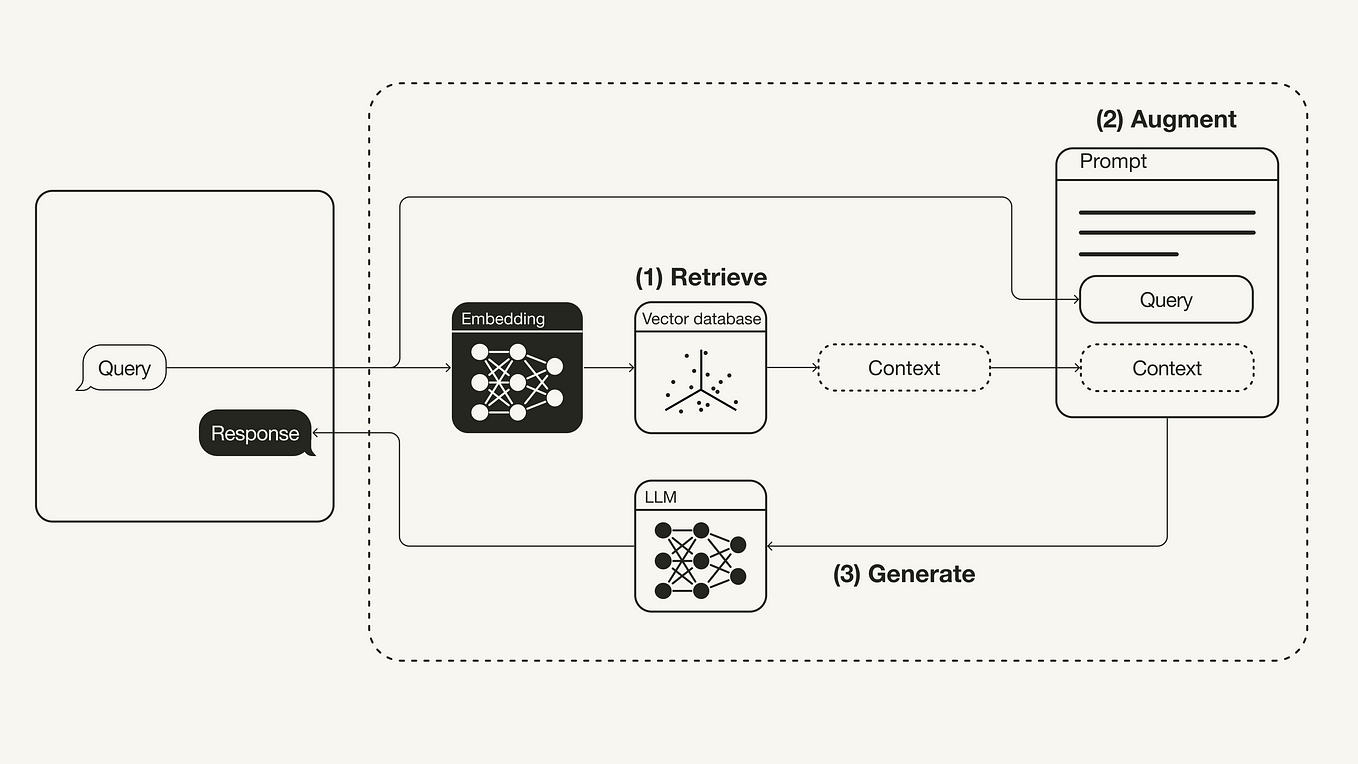

LlamaIndex (formerly GPT Index) specializes in data ingestion, indexing, and retrieval workflows. It excels at integrating large datasets and knowledge bases with LLMs to improve answer accuracy in retrieval-augmented generation (RAG) systems.

What Makes LlamaIndex Different:

While LangChain supports basic retrieval, LlamaIndex provides more control over data parsing, indexing, and retrieval processes, making it highly suitable for enterprise knowledge management and document retrieval workflows.
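LlamaIndex handles parsing, chunking, embedding, and retrieval with far more sophistication, but the basic ingest, index, and retrieve flow can be sketched with an inverted keyword index. This toy assumes fixed-size word chunks and keyword-overlap scoring in place of embeddings; none of the names are LlamaIndex's API.

```python
import re
from collections import defaultdict


def chunk(text: str, size: int = 8) -> list[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KeywordIndex:
    """Toy inverted index: maps each token to the ids of chunks containing it."""

    def __init__(self, docs: dict[str, str], chunk_size: int = 8):
        self.chunks: list[tuple[str, str]] = []  # (doc_id, chunk_text)
        self.postings: dict[str, set[int]] = defaultdict(set)
        for doc_id, text in docs.items():
            for piece in chunk(text, chunk_size):
                cid = len(self.chunks)
                self.chunks.append((doc_id, piece))
                for tok in tokenize(piece):
                    self.postings[tok].add(cid)

    def retrieve(self, query: str, k: int = 2) -> list[tuple[str, str]]:
        # Score chunks by how many query tokens they contain; return the top k.
        scores: dict[int, int] = defaultdict(int)
        for tok in tokenize(query):
            for cid in self.postings.get(tok, ()):
                scores[cid] += 1
        best = sorted(scores, key=lambda c: (-scores[c], c))[:k]
        return [self.chunks[c] for c in best]


docs = {
    "hr": "Employees accrue 20 vacation days per year. Unused vacation days expire in March.",
    "it": "Reset your password from the self-service portal. Passwords expire every 90 days.",
}
index = KeywordIndex(docs)
print(index.retrieve("when do vacation days expire", k=1))
```

Returning the source `doc_id` alongside each chunk is what lets a RAG system cite where an answer came from. Fixed word windows can split sentences; production pipelines usually chunk on sentence or token boundaries, often with overlap.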

Use Cases:

- Building enterprise knowledge bases.

- Enhancing document QA systems.

- Dynamic data retrieval from large datasets.

Pricing:

Open-source and free.

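| Aspect | Details |
|---|---|
| Strengths | Fine-grained data ingestion, advanced retrieval workflows |
| Ideal For | Knowledge management, document search, enterprise data integration |
| Comparison to LangChain | Focused on data and content orchestration and indexing rather than general chaining |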

6. Haystack

Overview:

Haystack is an NLP framework designed to build question-answering systems, document search engines, and retrieval-augmented workflows.

It’s highly modular, supporting multiple models and complex pipeline configurations.

What Makes Haystack Different:

It excels in large-scale information retrieval and hybrid RAG systems, with features that support extensive document management and scalable pipelines. For search-heavy workloads, its retrieval components can offer more flexibility than a general-purpose chaining framework.
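Hybrid retrieval usually runs a keyword retriever (such as BM25) and an embedding retriever side by side and then merges their rankings. One common merging rule is reciprocal rank fusion (RRF): each document scores the sum of 1 / (k + rank) over every list it appears in, with k = 60 as a conventional default. The sketch below is a standalone RRF implementation with made-up document ids, not Haystack's API.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of doc ids; each doc scores sum(1 / (k + rank))."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


keyword_hits = ["doc_faq", "doc_pricing", "doc_api"]   # e.g. from a BM25 retriever
vector_hits = ["doc_api", "doc_faq", "doc_changelog"]  # e.g. from an embedding retriever
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

RRF only uses ranks, so it needs no calibration between BM25 scores and cosine similarities, which live on different scales.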

Use Cases:

- Enterprise search & content discovery.

- Custom question-answering systems.

- Document management at scale.

Pricing:

Open-source and free.

| Aspect | Details |
|---|---|
| Strengths | Modular NLP pipelines, scalable document retrieval |
| Ideal For | Search-heavy applications, enterprise knowledge bases |
| Comparison to LangChain | Advanced retrieval and document management capabilities |

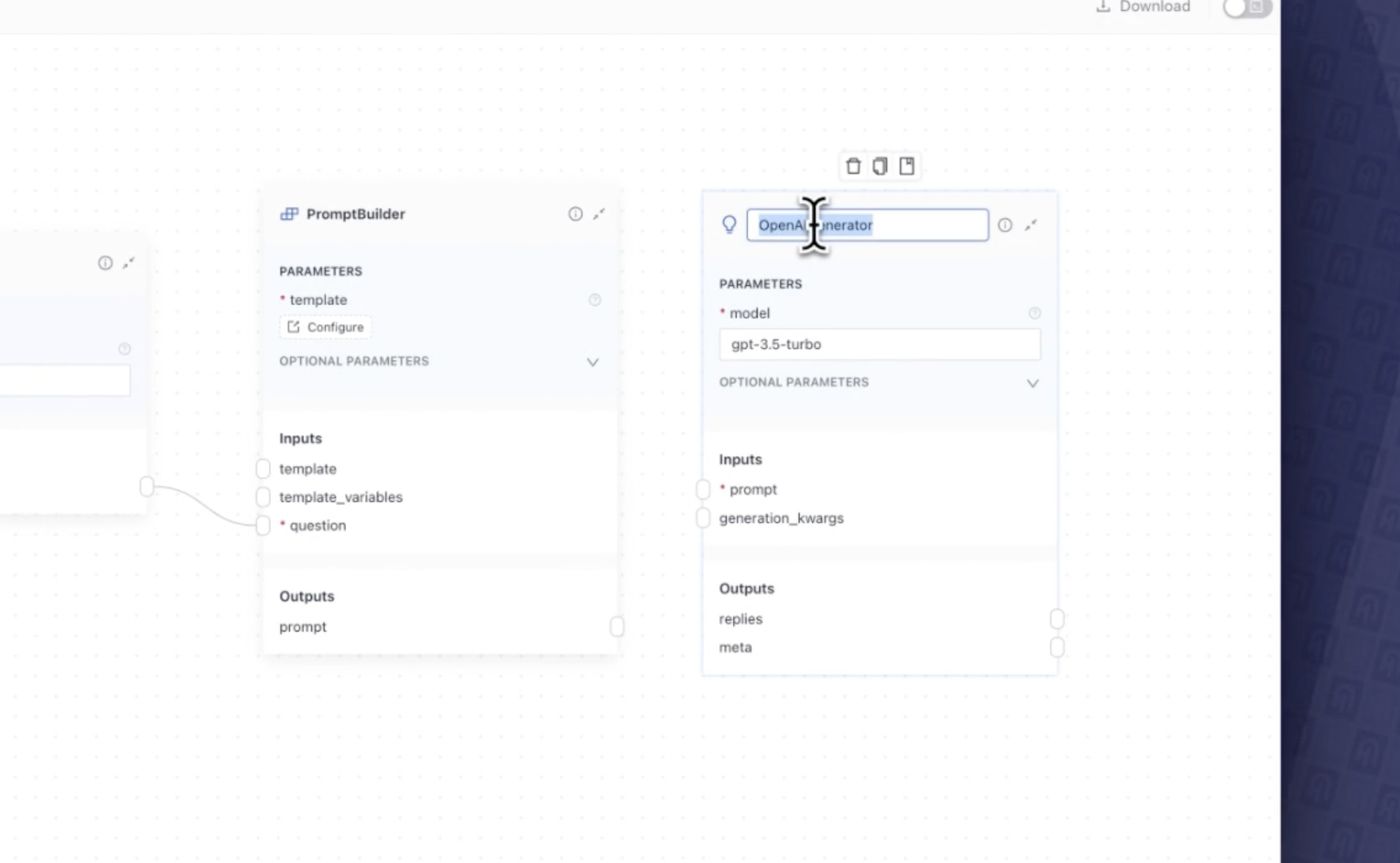

7. Flowise AI

Overview:

Flowise AI is a visual, low-code platform allowing rapid construction of LLM workflows through drag-and-drop interfaces.

It is designed for teams that want to prototype and deploy AI applications without extensive coding.

What Makes Flowise Different:

Unlike LangChain, which requires programming knowledge, Flowise empowers non-technical users by simplifying workflow building.

It’s perfect for quick ideation, MVPs, or teams aiming to accelerate deployment.
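Behind the drag-and-drop canvas, visual builders generally represent a flow as a graph of nodes and edges and execute it in dependency order. The sketch below assumes a hypothetical flow format (a dict of nodes with `op` and `deps`) and a mock model node; it is not Flowise's export format, only an illustration of how a visual graph maps to execution.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Each op receives the outputs of its upstream nodes plus the user message.
OPS: dict[str, Callable[[list[str], str], str]] = {
    "user_input": lambda upstream, msg: msg,
    "prompt_template": lambda upstream, msg: f"Answer briefly: {upstream[0]}",
    "mock_llm": lambda upstream, msg: f"(model reply to '{upstream[0]}')",  # stand-in model
}

# Hypothetical saved flow: three boxes wired in a line on the canvas.
FLOW = {
    "in": {"op": "user_input", "deps": []},
    "tpl": {"op": "prompt_template", "deps": ["in"]},
    "llm": {"op": "mock_llm", "deps": ["tpl"]},
}


def run_flow(flow: dict, message: str) -> dict[str, str]:
    """Execute nodes in dependency order and return every node's output."""
    graph = {node: spec["deps"] for node, spec in flow.items()}
    outputs: dict[str, str] = {}
    for node in TopologicalSorter(graph).static_order():
        spec = flow[node]
        upstream = [outputs[d] for d in spec["deps"]]
        outputs[node] = OPS[spec["op"]](upstream, message)
    return outputs


print(run_flow(FLOW, "What is RAG?")["llm"])
```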

Use Cases:

- Rapid chatbot development.

- Workflow automation by non-developers.

- Quick deployment prototypes.

Pricing:

Open-source, with a free self-hosted version and paid cloud options.

| Aspect | Details |
|---|---|
| Strengths | Visual interface, fast prototyping, minimal coding |
| Ideal For | Non-technical teams, rapid MVP development |
| Comparison to LangChain | Simplifies complex pipeline setup into visual workflows |

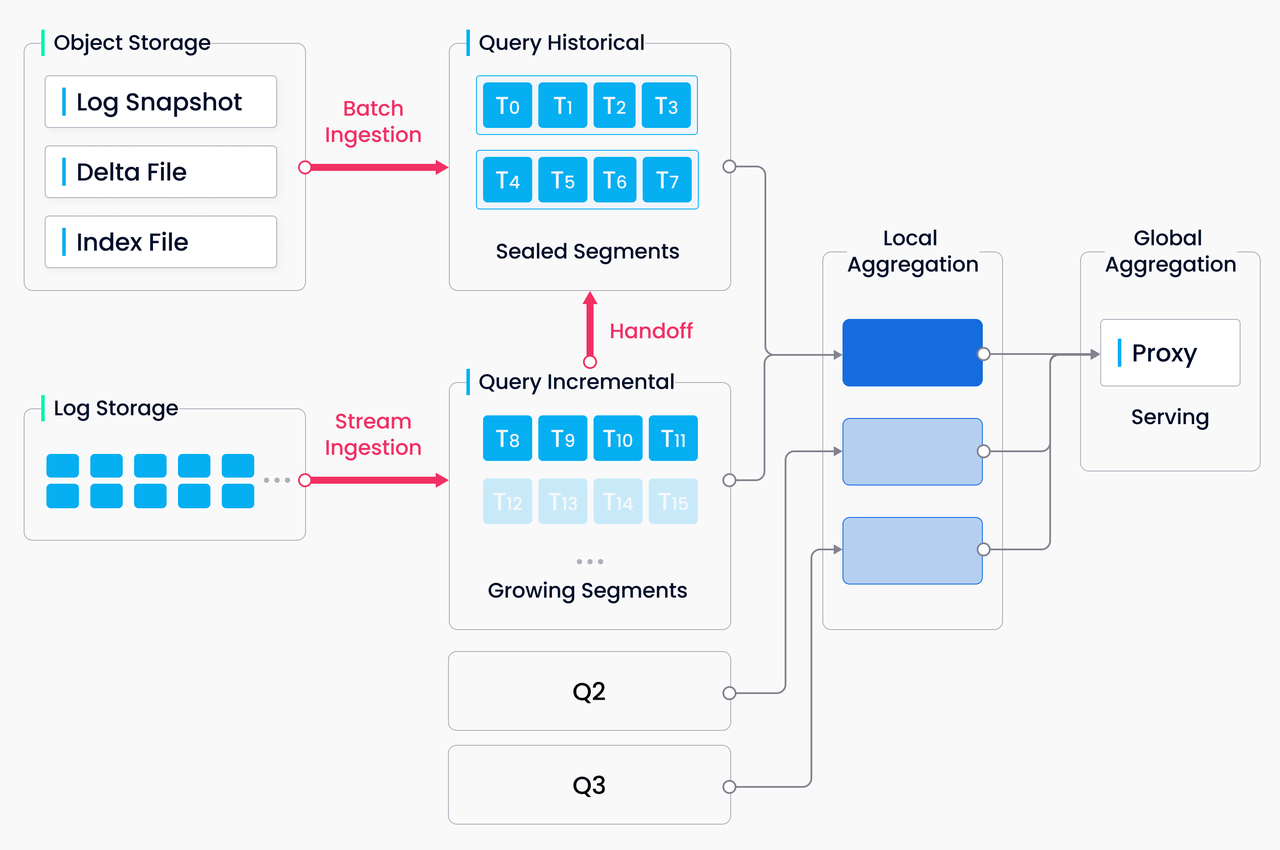

8. Milvus

Overview:

Milvus is a high-performance vector database optimized for large-scale similarity search based on embeddings.

It scales to large datasets and supports real-time retrieval, making it well suited to semantic search and recommendation systems.
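Milvus avoids comparing a query against every stored vector by building approximate nearest-neighbour (ANN) indexes such as HNSW or IVF. To show what those indexes approximate, here is exact, brute-force cosine-similarity search in plain Python over a few made-up three-dimensional embeddings; it is not Milvus's API, and real embeddings have hundreds of dimensions.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], vectors: dict[str, list[float]],
          k: int = 2) -> list[tuple[str, float]]:
    """Exact nearest neighbours by cosine similarity: scans all n vectors per query."""
    scored = ((cosine(query, v), key) for key, v in vectors.items())
    return [(key, round(score, 3)) for score, key in heapq.nlargest(k, scored)]


embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "car": [0.0, 0.2, 0.95],
}
print(top_k([1.0, 0.1, 0.0], embeddings))
```

This linear scan is fine for thousands of vectors but becomes the bottleneck at millions or billions, which is the gap a dedicated vector database fills by trading a little recall for much faster lookups.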

What Makes Milvus Different:

While LangChain supports basic vector store integrations, Milvus is dedicated to enterprise scalability and ultra-fast, large-scale embedding similarity search capabilities.

It handles billions of vectors efficiently, powering advanced AI solutions.

Use Cases:

- Semantic search engines.

- Recommendation and personalization engines.

- Large-scale embedding storage and retrieval.

Pricing:

Open-source version available; commercial enterprise solutions provided under licensing.

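| Aspect | Details |
|---|---|
| Strengths | High-speed similarity search, scalable architecture |
| Ideal For | Semantic search, embedding-intensive applications |
| Comparison to LangChain | A dedicated vector database for large-scale similarity search rather than an orchestration framework |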

9. Weaviate

Overview:

Weaviate is an enterprise-grade vector database supporting scalable semantic search, knowledge graphs, and multi-modal data.

It offers real-time updates, integrates with large datasets, and supports complex AI workflows.

What Makes Weaviate Different:

It extends basic vector search with native knowledge graph support and real-time, multi-modal data management, making it suitable for sophisticated, scalable AI applications requiring complex data relationships.

Use Cases:

- Knowledge graphs for enterprises.

- Large-scale semantic search applications.

- Content management requiring dynamic updates.

Pricing:

Offers free community edition and enterprise licensing options.

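| Aspect | Details |
|---|---|
| Strengths | Knowledge graphs, real-time updates, multi-modal data support |
| Ideal For | Complex enterprise knowledge bases and large-scale semantic search |
| Comparison to LangChain | Adds knowledge-graph and multi-modal data management on top of vector search |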

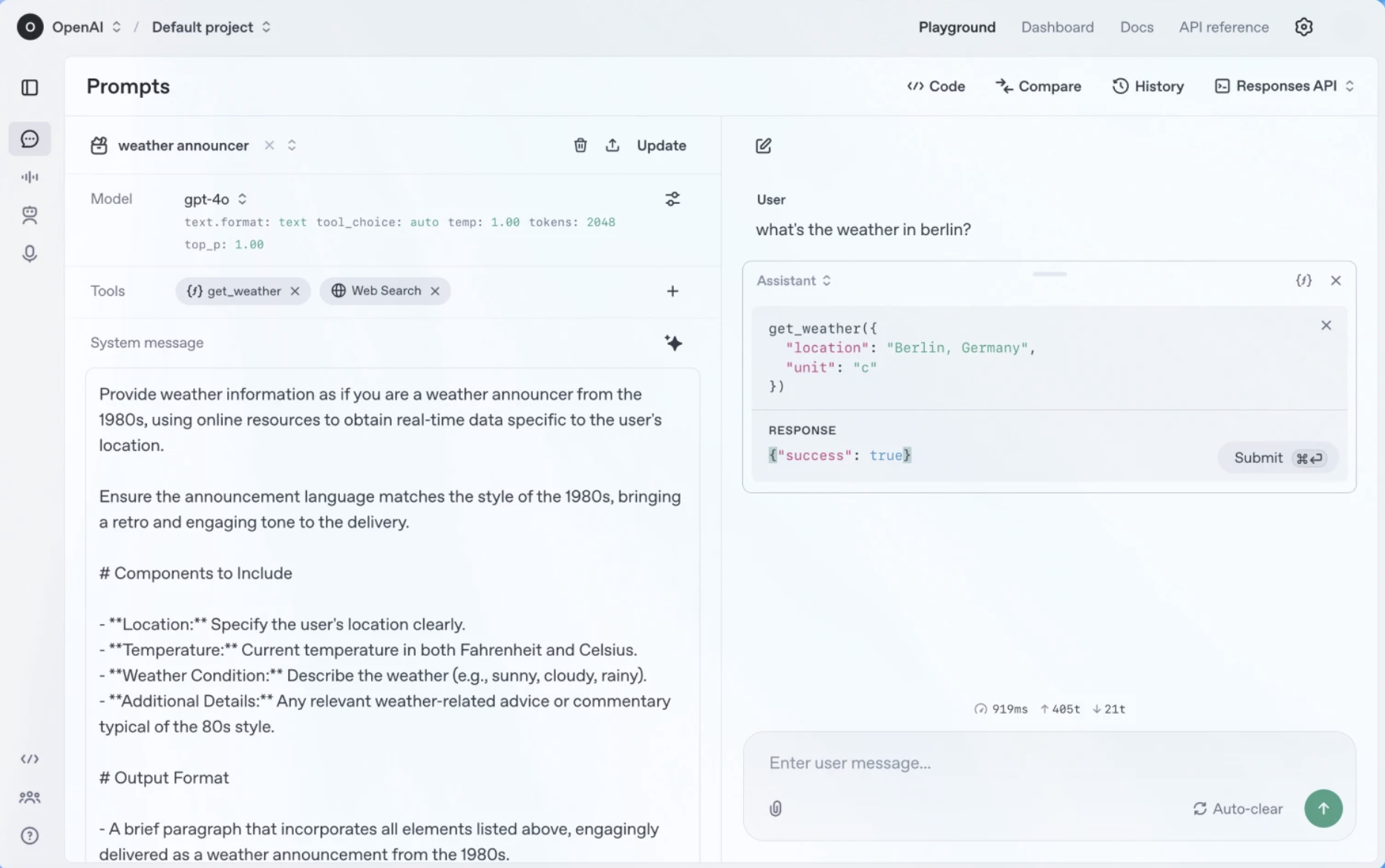

10. OpenAI & Direct Model APIs

Overview:

APIs from OpenAI, Hugging Face, and others allow direct access to models, giving developers full control.

This approach bypasses intermediary frameworks, enabling high customization and experimentation.

What Makes These APIs Different:

While LangChain simplifies chaining, direct APIs provide granular control over prompt design, model choice, and output handling—ideal for research, application-specific models, or innovation-driven projects.
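Calling a model API directly means building the HTTP request yourself. The sketch below assembles an OpenAI Chat Completions request using only the standard library and does not send it. The model name `gpt-4o-mini`, the system prompt, and reading the key from an `OPENAI_API_KEY` environment variable are example assumptions; check OpenAI's API reference for current models and parameters.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(user_text: str, model: str = "gpt-4o-mini",
                  temperature: float = 0.2) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    body = {
        "model": model,
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": "You are a concise technical assistant."},
            {"role": "user", "content": user_text},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


req = build_request("Summarize RAG in one sentence.")
# Sending it requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```

Every knob (model, temperature, message list) is explicit in the request body, which is the granular control the paragraph above refers to; the trade-off is that retries, streaming, and tool handling are also yours to write.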

Use Cases:

- Building custom NLP solutions.

- Conducting advanced research.

- Designing bespoke chatbots or generators.

Pricing:

Usage-based, pay-as-you-go model.

| Aspect | Details |
|---|---|
| Strengths | Full control, rapid experimentation |
| Ideal For | Research, custom models, innovative solutions |
| Comparison to LangChain | Lightweight direct access with full control and no middleware layer |

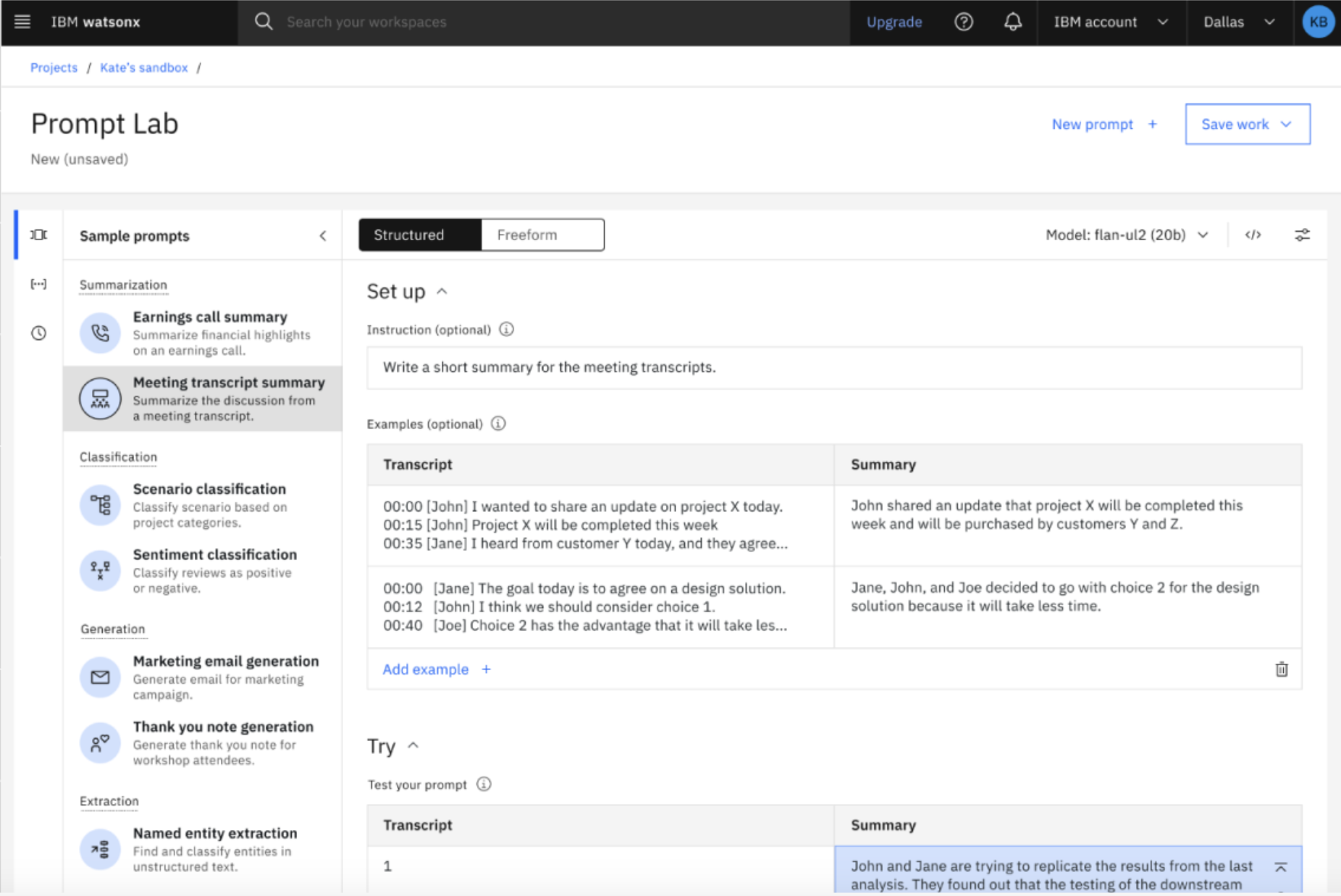

11. IBM watsonx

Overview:

IBM watsonx is a comprehensive enterprise AI platform designed for large-scale, secure, and compliant AI deployment.

It offers a suite of tools for data management, model training, fine-tuning, and deployment within a unified environment suited for regulated industries.

What Makes watsonx Different:

Unlike open-source frameworks such as LangChain, which emphasize customization, watsonx provides a fully managed, end-to-end platform.

It offers enterprise-grade security, governance, and compliance features, making it ideal for organizations where security and regulatory adherence are top priorities.

Use Cases:

- Large-scale deployment of NLP and computer vision models in finance, healthcare, or government sectors.

- Customized model training and fine-tuning with strict compliance requirements.

- AI solutions requiring integrated data security and governance.

| Aspect | Details |
|---|---|
| Strengths | Robust security, compliance, enterprise data handling |
| Ideal For | Regulated industries, large enterprises needing scalable AI solutions |
| Comparison to LangChain | Fully managed, enterprise environment versus DIY, open-source pipelines |

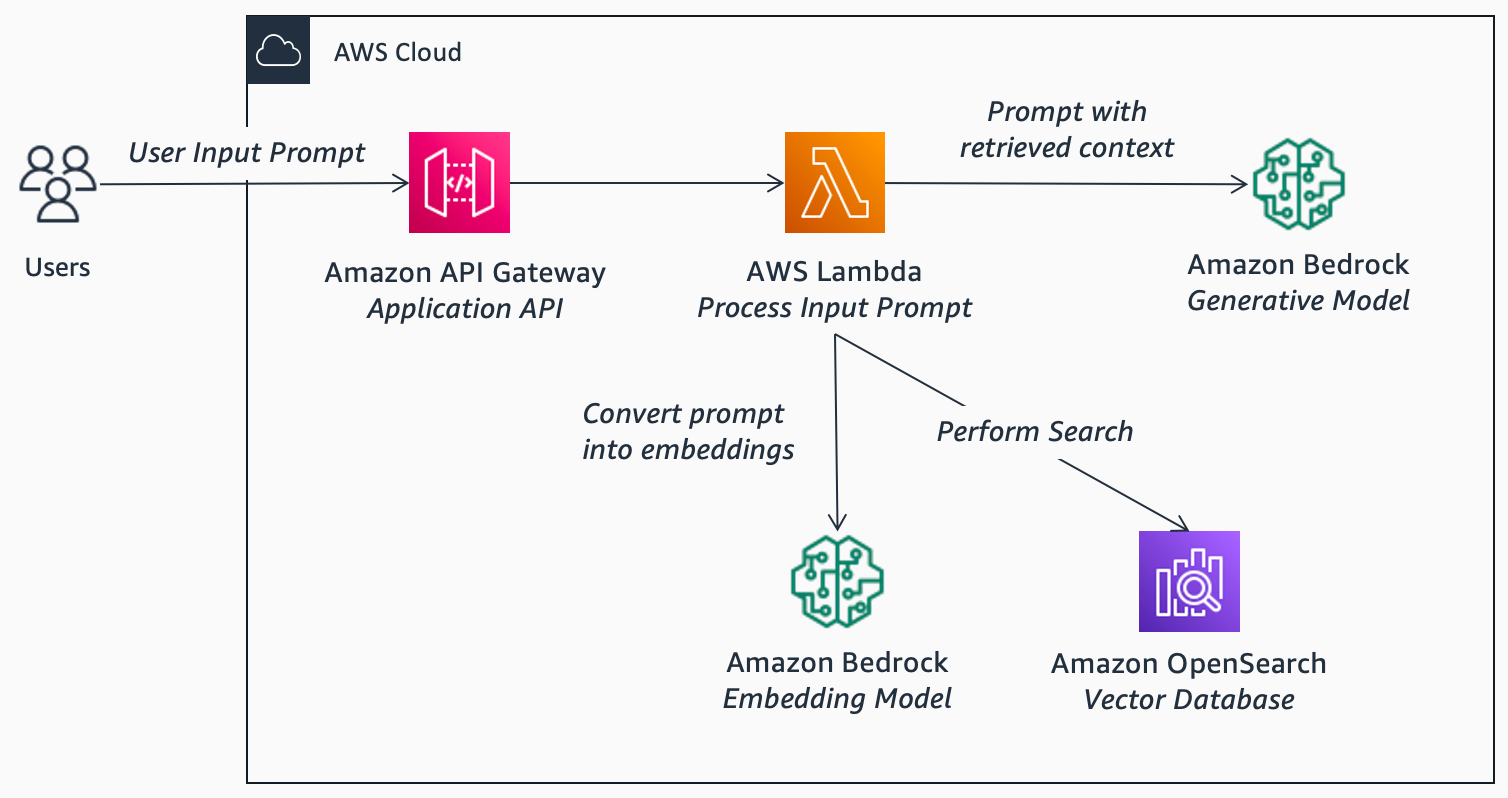

12. Amazon Bedrock

Overview:

Amazon Bedrock is a fully managed AWS service that provides access to foundation models from multiple providers through a single API.

It simplifies large-scale model hosting, inference, and updates, allowing quick deployment across regions.

What Makes Bedrock Different:

Unlike LangChain, which emphasizes customizable chaining, Bedrock provides a managed, cloud-native environment with ready-to-use foundation models, reducing the infrastructure overhead for enterprise deployment.

Use Cases:

- Cross-region, scalable AI services.

- Business automation at scale.

- Large-scale AI inference deployments.

| Aspect | Details |
|---|---|
| Strengths | Fully managed, scalable, multi-model support |
| Ideal For | Cloud-native enterprise AI, high availability |
| Comparison to LangChain | Managed deployment versus open-source, customizable chain |

13. Microsoft Azure AI

Overview:

Azure AI provides a comprehensive set of cloud-based AI services, including NLP, vision, and conversational AI.

It combines a visual pipeline builder with enterprise security and seamless data integration.

What Makes Azure Different:

It offers a fully integrated, cloud-native ecosystem, making it ideal for organizations already using Microsoft products.

It supports hybrid deployment, security, and compliance, bringing your AI workflows into a unified, scalable environment.

Use Cases:

- Hybrid cloud AI solutions.

- Large-scale enterprise automation.

- Integration with and extension of existing Microsoft systems.

| Aspect | Details |
|---|---|
| Strengths | Enterprise security, hybrid cloud, deep Microsoft ecosystem integration |
| Ideal For | Large organizations seeking seamless enterprise AI deployment |
| Comparison to LangChain | Managed, integrated cloud platform over modular open-source |

Strategic Takeaway

| Tool / Platform | Focus Area | Core Strengths | Best For | Cost / Availability |
|---|---|---|---|---|
| Vellum AI | Prompt engineering & testing | Precise control, fast iteration | Prompt refinement, NLP research | Tiered, varies |
| Mirascope | Validation & output reliability | Validation, traceability, debugging | Production, compliance-heavy systems | Free, open-source |
| Galileo | Debugging and diagnostics | Transparency, error insights | Model error analysis, iterative fine-tuning | Enterprise plans, custom tiers |
| AutoGPT | Autonomous multi-step workflows | Self-operating, goal-driven agents | Complex automation, autonomous decision-making | Free, community-supported |
| LlamaIndex | Data ingestion & retrieval | Knowledge management, indexing | Enterprise knowledge bases, document retrieval | Free, open-source |
| Haystack | NLP pipelines, document retrieval | Scalable, robust pipelines | Search engines, enterprise QA | Free, open-source |
| Flowise AI | Visual low-code workflow building | Drag-and-drop, rapid prototyping | Non-technical teams, fast deployment | Free, open-source |
| Milvus | Vector similarity search | Ultra-fast, scalable embedding search | Large-scale recommendation, semantic search | Free, paid options |
| Weaviate | Knowledge graphs & enterprise vector search | Multi-modal, real-time, enterprise support | Knowledge management, complex large-scale search | Free tier & enterprise licenses |
| OpenAI APIs | Direct, flexible API access | High customization, rapid experimentation | Custom models, research, innovative applications | Pay-as-you-go |
| IBM watsonx | Enterprise AI platform | Secure, compliant, end-to-end environment | Regulated industries, large-scale enterprise AI | Enterprise licensing, varies |
| Amazon Bedrock | Managed multi-model deployment | Fully managed, scalable, cloud-native | Global AI services, high availability in AWS | Pay-as-you-go |
| Microsoft Azure AI | Cloud-based, enterprise-grade AI tools | Integrated, secure, hybrid-cloud capable | Business-critical applications, hybrid environments | Pay-as-you-go |

Enhancing Multilingual AI Workflows: Why Specialized Localization with OneSky Matters

As companies expand globally, deploying AI that supports multiple languages becomes crucial.

Many of the frameworks discussed, such as AutoGPT, LlamaIndex, and Haystack, offer advanced automation, retrieval, and agent capabilities.

However, they often don’t include dedicated tools for multilingual content management and localization.

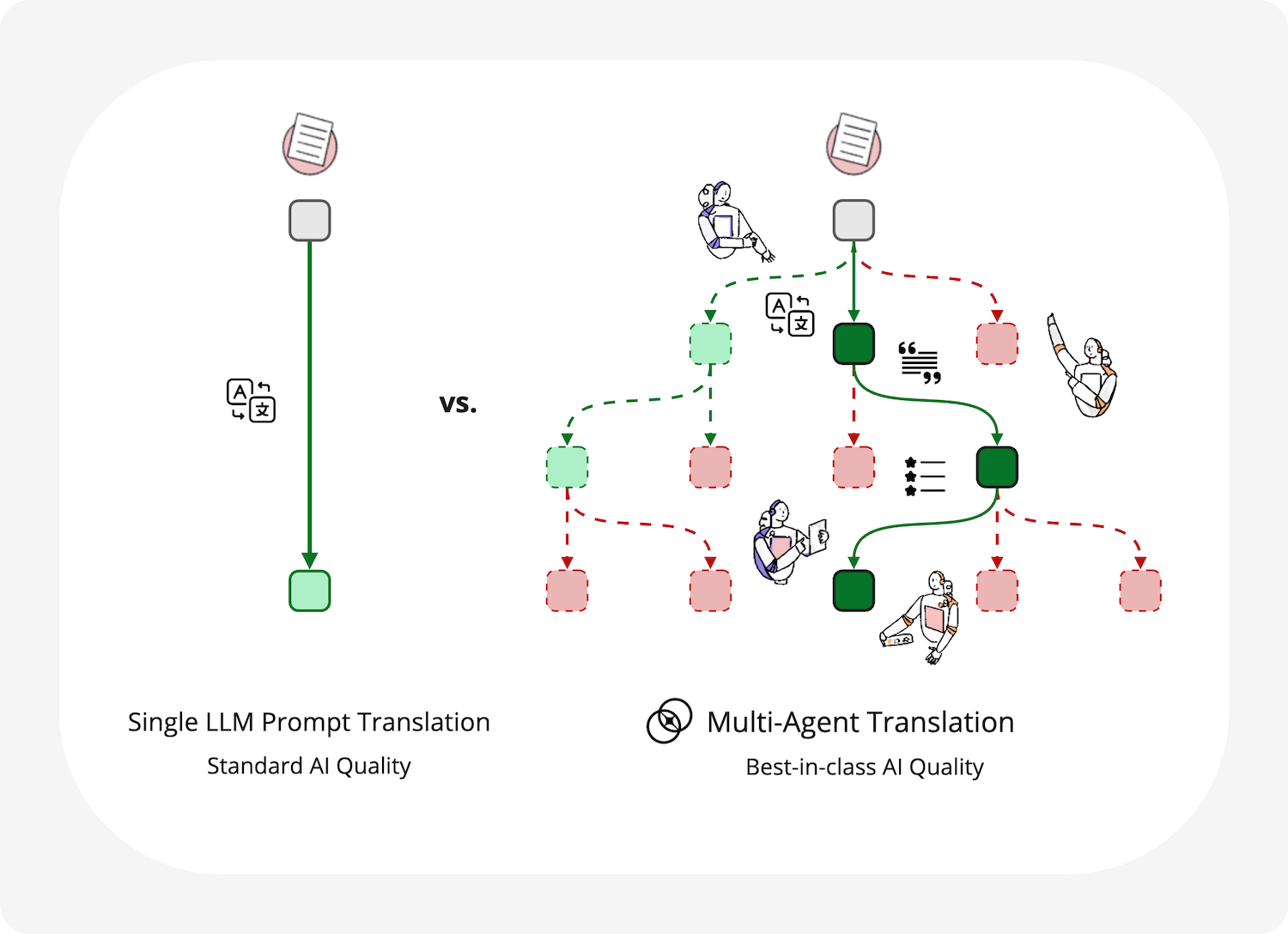

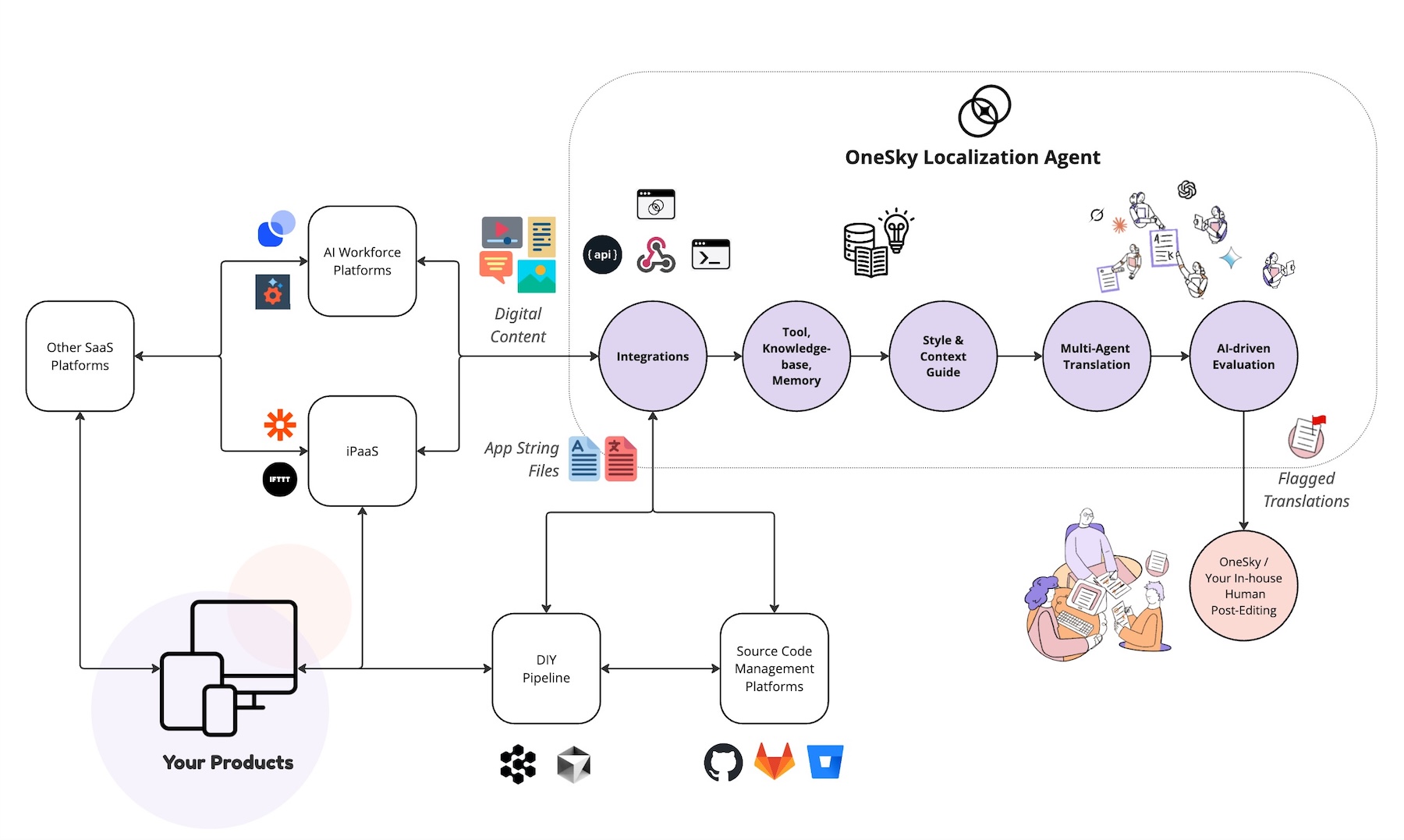

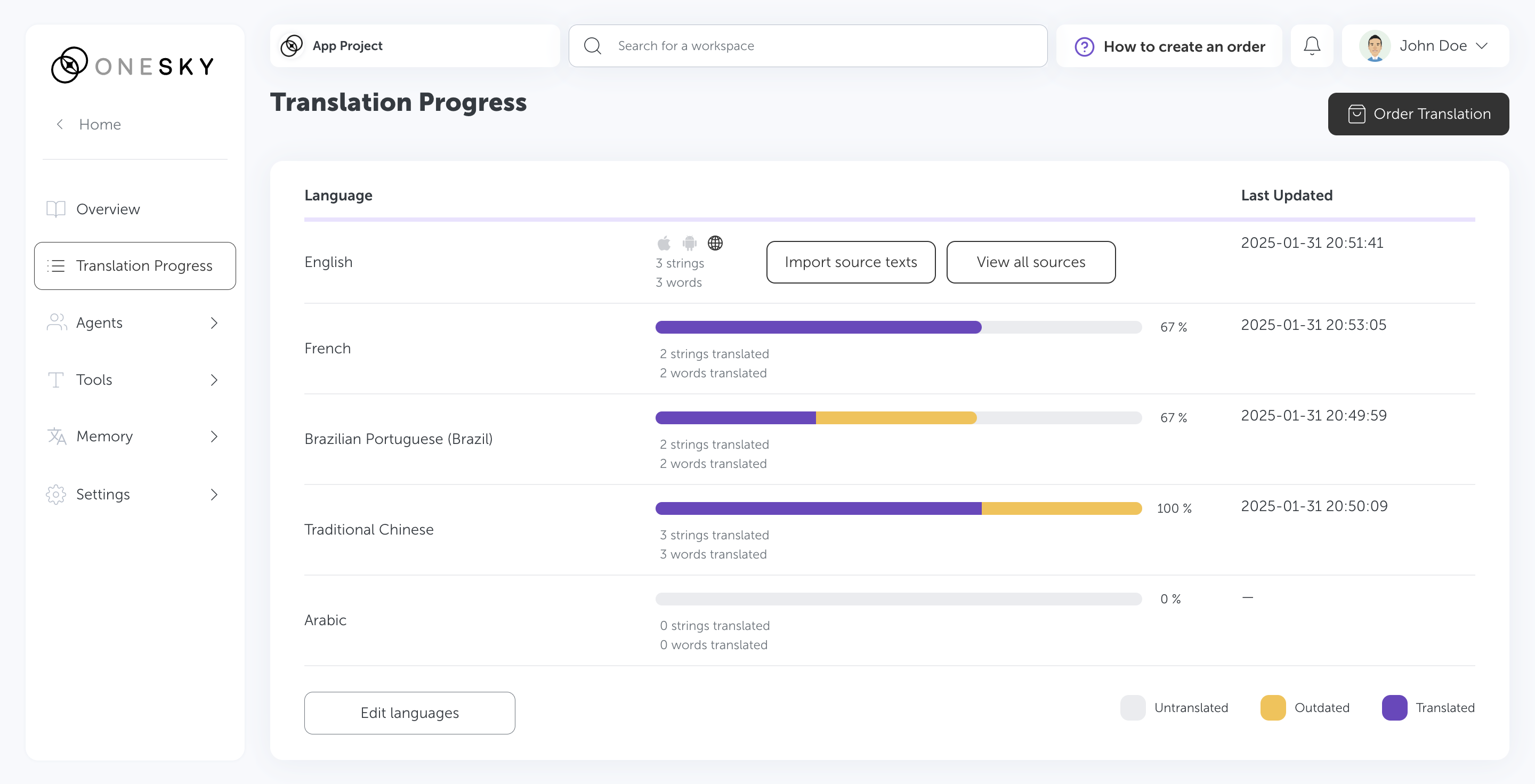

This is where OneSky Localization Agent (OLA) stands out.

It is a purpose-built, AI-driven solution designed specifically for content translation and localization workflows.

It goes beyond simply automating translation; it integrates high-quality, context-aware localization into your existing AI systems.

What makes OLA different and how does it improve your multilingual process?

1. Specialized multi-agent orchestration

Unlike general AI workflows, OLA is optimized to coordinate teams of AI agents focused on language translation.

These agents handle preprocessing, language nuances, cultural adaptation, and tone preservation—all automatically.

2. High-quality, scalable translations

Manual translation is slow and costly, especially at scale.

OLA uses advanced machine translation models combined with context management to generate consistent, high-quality content quickly. This saves both time and money.

3. Ongoing quality monitoring and learning

OLA doesn’t just translate content and move on.

It tracks translation quality with feedback from linguistic experts and AI agents.

This feedback loop helps improve your localization quality over time, maintaining brand consistency and building user trust.

4. Seamless integration with existing workflows

Whether you’re using AutoGPT, Haystack, or LlamaIndex, OLA can be integrated into your current AI architecture.

It turns a standard automation pipeline into a content localization system capable of handling real-time, multi-language content effortlessly.

How does this push things further?

Most AI systems focus on content generation, retrieval, or basic automation.

They lack specific features for nuanced, multi-language content adaptation. OLA addresses this gap.

It provides an AI-powered, scalable, and adaptable localization ecosystem that enhances your ability to deliver high-quality, localized content worldwide—quickly and consistently.

In simple terms, adding OneSky Localization Agent to your AI workflow doesn’t just automate translation; it makes your entire multilingual content process smarter, faster, and more reliable.

This allows you to serve international markets more effectively, with less manual effort and higher quality.

How to Choose the Right Framework

- Prompt refinement & versioning: Vellum AI, Mirascope

- Error diagnosis & model tuning: Galileo

- Complex autonomous workflows: AutoGPT, MetaGPT

- Large-scale knowledge & retrieval: LlamaIndex, Haystack, Milvus, Weaviate

- Low-code rapid prototyping: Flowise AI

- Enterprise-scale deployment: IBM watsonx, Amazon Bedrock, Azure AI

- Multilingual applications & localization: OneSky Localization Agent (OLA)*

*Note: OLA integrates multi-agent orchestration tailored to localization workflows, improving efficiency in multilingual AI content deployment.

Final Insights

In today’s AI landscape, the best tool depends on your specific use case, team skills, and infrastructure.

Whether you need deep customization, fast prototyping, scalable enterprise support, or multilingual content localization, there is an alternative suited for you.

Ready to Take Multilingual AI Content to the Next Level?

If your organization is aiming for seamless, high-quality multilingual content deployment at scale, OneSky Localization Agent (OLA) is your essential partner.

By integrating AI-powered localization into your existing workflows, OLA helps you deliver accurate, culturally aware content faster, more reliably, and at lower costs.

Discover how OLA can enhance your global expansion initiatives.

Start your free trial today and see firsthand how autonomous multi-agent localization can transform your content management—making your international reach more efficient and impactful.

Frequently Asked Questions (FAQ)

Q1: Why explore alternatives to LangChain?

LangChain is powerful, but it can be inflexible for rapid development and deep customization.

If you need faster prototyping, better control over your data, or multi-language support, alternative tools can give you a strategic advantage.

Q2: How do I choose the best framework for my project?

Start by defining your main priorities:

- Need quick prototypes? Try low-code platforms like Flowise.

- Building scalable, secure enterprise solutions? Consider IBM watsonx or Azure AI.

- Supporting multiple languages and markets? Use OneSky Localization Agent (OLA) to simplify localization workflows.

Q3: Why should I consider using OneSky Localization Agent (OLA)?

OLA automates content translation across many languages with high accuracy.

It orchestrates AI agents to adapt, translate, and analyze multilingual content—saving time and ensuring cultural relevance at scale.

Q4: Can I combine AI frameworks with localization tools?

Yes.

Most of these frameworks are extensible, so a tool like OLA can be added as a translation and localization step inside your existing workflows, reducing manual effort and improving consistency.

Q5: Why is scalable, compliant AI deployment so crucial?

For large organizations, security, compliance, and scalability are critical.

Platforms like watsonx, Bedrock, and Azure provide enterprise-grade security and governance features that make it safer and more efficient to deploy AI solutions worldwide.

Q6: How does selecting the right tools accelerate innovation?

The optimal tools reduce development time, simplify testing, and enable faster deployment.

Low-code platforms like Flowise speed up prototyping, while enterprise solutions support large-scale rollouts.

Integrating tools like OLA unlocks rapid, high-quality localization for global markets.

Q7: What are the benefits of multi-agent orchestration?

It coordinates different AI functions—such as content creation, validation, and translation—working together smoothly.

This approach makes your systems more scalable, autonomous, and capable of tackling complex tasks faster.
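The orchestration pattern itself is simple to sketch: pass shared state through a sequence of specialized agents and repeat the round if a reviewer agent rejects the result. The agents below are hypothetical stubs (the "translator" just prefixes a tag) so the example runs offline; this is a generic illustration, not OLA's implementation or API.

```python
from typing import Callable

Agent = Callable[[dict], dict]


def orchestrate(agents: list[tuple[str, Agent]], task: dict, max_rounds: int = 2) -> dict:
    """Pass shared state through each agent in turn; retry the round if not approved."""
    state = {**task, "log": []}
    for round_no in range(1, max_rounds + 1):
        for name, agent in agents:
            state = agent(state)
            state["log"].append(f"round {round_no}: {name}")
        if state.get("approved"):
            break
    return state


# Hypothetical agents; in practice each would wrap its own model call or tool.
def writer(state: dict) -> dict:
    return {**state, "draft": f"Release notes for {state['topic']}"}


def translator(state: dict) -> dict:
    return {**state, "translation": f"[fr] {state['draft']}"}  # stand-in for real MT


def reviewer(state: dict) -> dict:
    # Approve only if the translation kept the product name intact.
    return {**state, "approved": state["topic"] in state["translation"]}


result = orchestrate(
    [("writer", writer), ("translator", translator), ("reviewer", reviewer)],
    {"topic": "v2.0"},
)
print(result["translation"], result["approved"])
```

Keeping each role in its own function is what makes the pipeline easy to extend: adding a terminology checker, for example, means inserting one more agent into the list.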

Written by